Welcome to Envoy Gateway

Envoy Gateway Documents

Note: This project is under active development. Many features are not complete. We would love for you to Get Involved!

Envoy Gateway is an open source project for managing Envoy Proxy as a standalone or Kubernetes-based application gateway. Gateway API resources are used to dynamically provision and configure the managed Envoy Proxies.

Ready to get started?

1 - Design

This section includes the design documents for Envoy Gateway.

1.1 - Goals

The high-level goal of the Envoy Gateway project is to attract more users to Envoy by lowering barriers to adoption through expressive, extensible, role-oriented APIs that support a multitude of ingress and L7/L4 traffic routing use cases; and to provide a common foundation for vendors to build value-added products without having to re-engineer fundamental interactions.

Objectives

Expressive API

The Envoy Gateway project will expose a simple and expressive API, with defaults set for many capabilities.

The API will be the Kubernetes-native Gateway API, plus Envoy-specific extensions and extension points. This expressive and familiar API will make Envoy accessible to more users, especially application developers, and make Envoy a stronger option for “getting started” as compared to other proxies. Application developers will use the API out of the box without needing to understand in-depth concepts of Envoy Proxy or use OSS wrappers. The API will use familiar nouns that users understand.

The core full-featured Envoy xDS APIs will remain available for those who need more capability and for those who add functionality on top of Envoy Gateway, such as commercial API gateway products.

This expressive API will not be implemented by Envoy Proxy, but rather by an officially supported translation layer on top.

Batteries included

Envoy Gateway will simplify how Envoy is deployed and managed, allowing application developers to focus on delivering core business value.

The project plans to include additional infrastructure components required by users to fulfill their Ingress and API gateway needs: It will handle Envoy infrastructure provisioning (e.g. Kubernetes Service, Deployment, et cetera), and possibly infrastructure provisioning of related sidecar services. It will include sensible defaults with the ability to override. It will include channels for improving ops by exposing status through API conditions and Kubernetes status sub-resources.

Making an application accessible needs to be a trivial task for any developer. Similarly, infrastructure administrators will enjoy a simplified management model that doesn’t require extensive knowledge of the solution’s architecture to operate.

All environments

Envoy Gateway will support running natively in Kubernetes environments as well as non-Kubernetes deployments.

Initially, Kubernetes will receive the most focus, with the aim of having Envoy Gateway become the de facto standard for Kubernetes ingress supporting the Gateway API.

Additional goals include multi-cluster support and various runtime environments.

Extensibility

Vendors will have the ability to provide value-added products built on the Envoy Gateway foundation.

It will remain easy for end-users to leverage common Envoy Proxy extension points such as providing an implementation for authentication methods and rate-limiting. For advanced use cases, users will have the ability to use the full power of xDS.

Since a general-purpose API cannot address all use cases, Envoy Gateway will provide additional extension points for flexibility. As such, Envoy Gateway will form the base of vendor-provided managed control plane solutions, allowing vendors to shift to a higher management plane layer.

Non-objectives

Cannibalize vendor models

Vendors need to have the ability to drive commercial value, so the goal is not to cannibalize any existing vendor monetization model, though some vendors may be affected by Envoy Gateway.

Disrupt current Envoy usage patterns

Envoy Gateway is purely an additive convenience layer and is not meant to disrupt any usage pattern of any user with Envoy Proxy, xDS, or go-control-plane.

Personas

In order of priority

1. Application developer

The application developer spends the majority of their time developing business logic code. They require the ability to manage access to their application.

2. Infrastructure administrators

The infrastructure administrators are responsible for the installation, maintenance, and operation of API gateway appliances in infrastructure, such as CRDs, roles, service accounts, certificates, etc.

Infrastructure administrators support the needs of application developers by managing instances of Envoy Gateway.

1.2 - System Design

Goals

- Define the system components needed to satisfy the requirements of Envoy Gateway.

Non-Goals

- Create a detailed design and interface specification for each system component.

Terminology

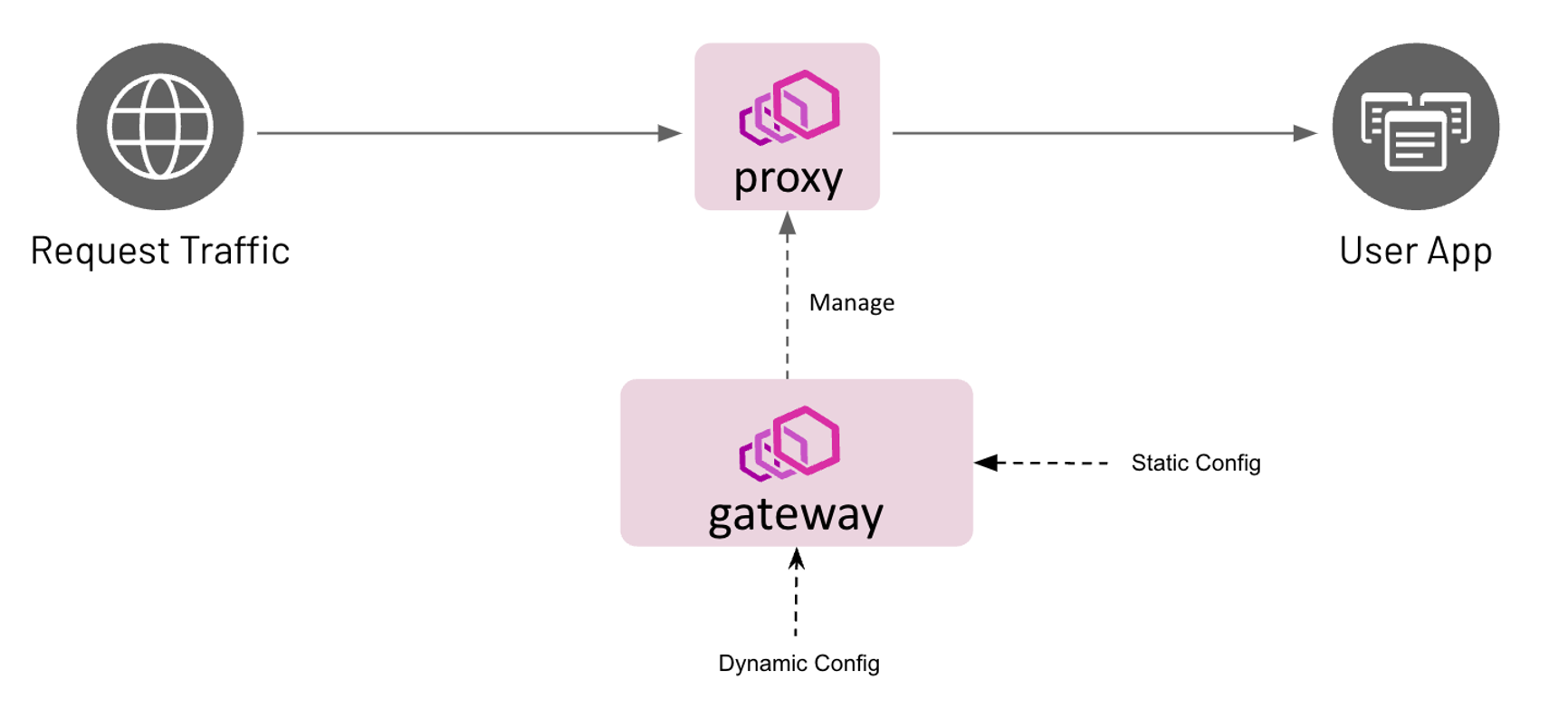

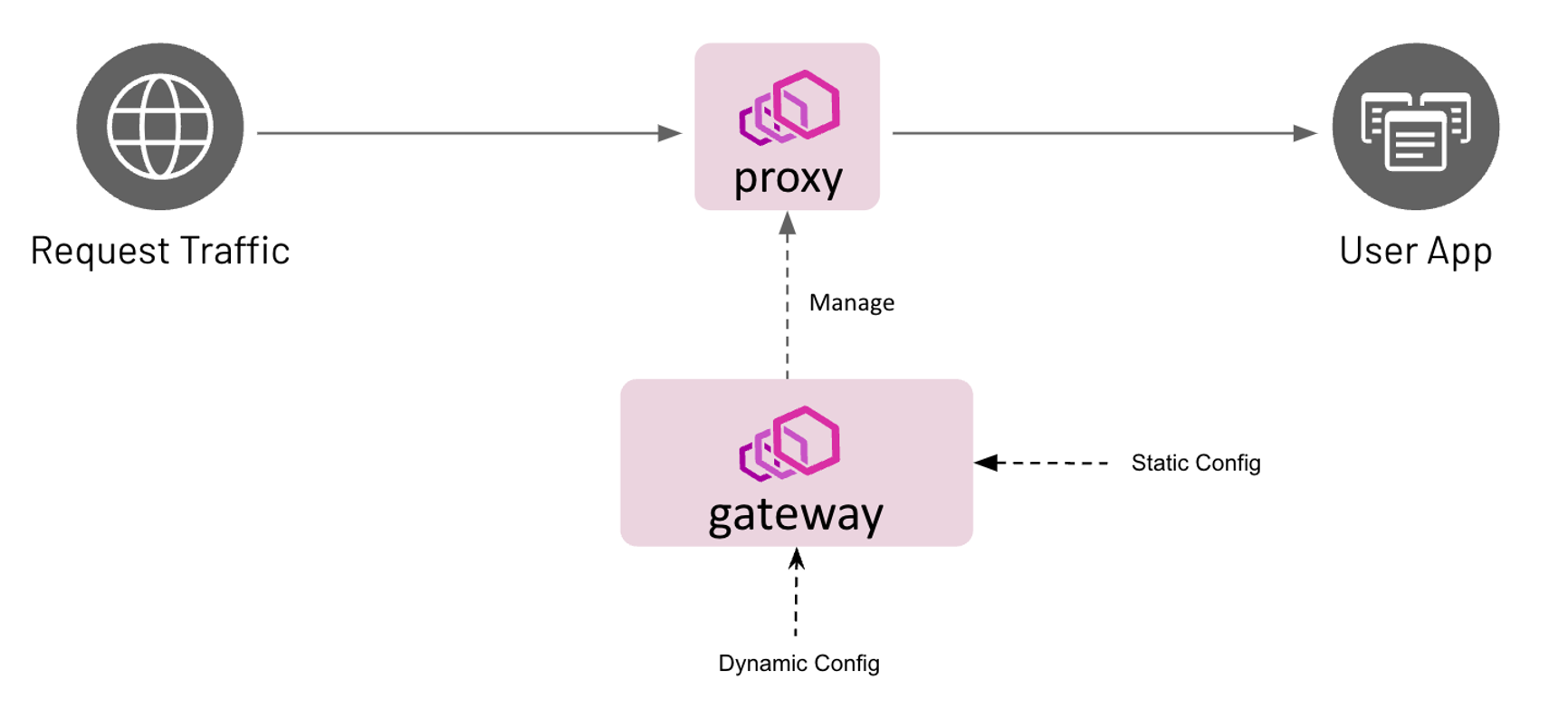

- Control Plane - A collection of inter-related software components for providing application gateway and routing functionality. The control plane is implemented by Envoy Gateway and provides services for managing the data plane. These services are detailed in the components section.

- Data Plane - Provides intelligent application-level traffic routing and is implemented as one or more Envoy proxies.

Architecture

Configuration

Envoy Gateway is configured statically at startup and the managed data plane is configured dynamically through Kubernetes resources, primarily Gateway API objects.

Static Configuration

Static configuration is used to configure Envoy Gateway at startup, e.g. to change the GatewayClass controllerName, configure a Provider, etc. Currently, Envoy Gateway only supports configuration through a configuration file. If the configuration file is not provided, Envoy Gateway starts up with default configuration parameters.

Dynamic Configuration

Dynamic configuration is based on the concept of declaring the desired state of the data plane and using reconciliation loops to drive the actual state toward the desired state. The desired state of the data plane is defined as Kubernetes resources that provide the following services:

- Infrastructure Management - Manage the data plane infrastructure, e.g. deploy, upgrade, etc. This configuration is expressed through GatewayClass and Gateway resources. The EnvoyProxy Custom Resource can be referenced by gatewayclass.spec.parametersRef to modify data plane infrastructure default parameters, e.g. expose Envoy network endpoints using a NodePort service instead of a LoadBalancer service.

- Traffic Routing - Define how to handle application-level requests to backend services. For example, route all HTTP requests for “www.example.com” to a backend service running a web server. This configuration is expressed through HTTPRoute and TLSRoute resources that match, filter, and route traffic to a backend.

Although a backend can be any valid Kubernetes Group/Kind resource, Envoy Gateway only supports a Service reference.

Components

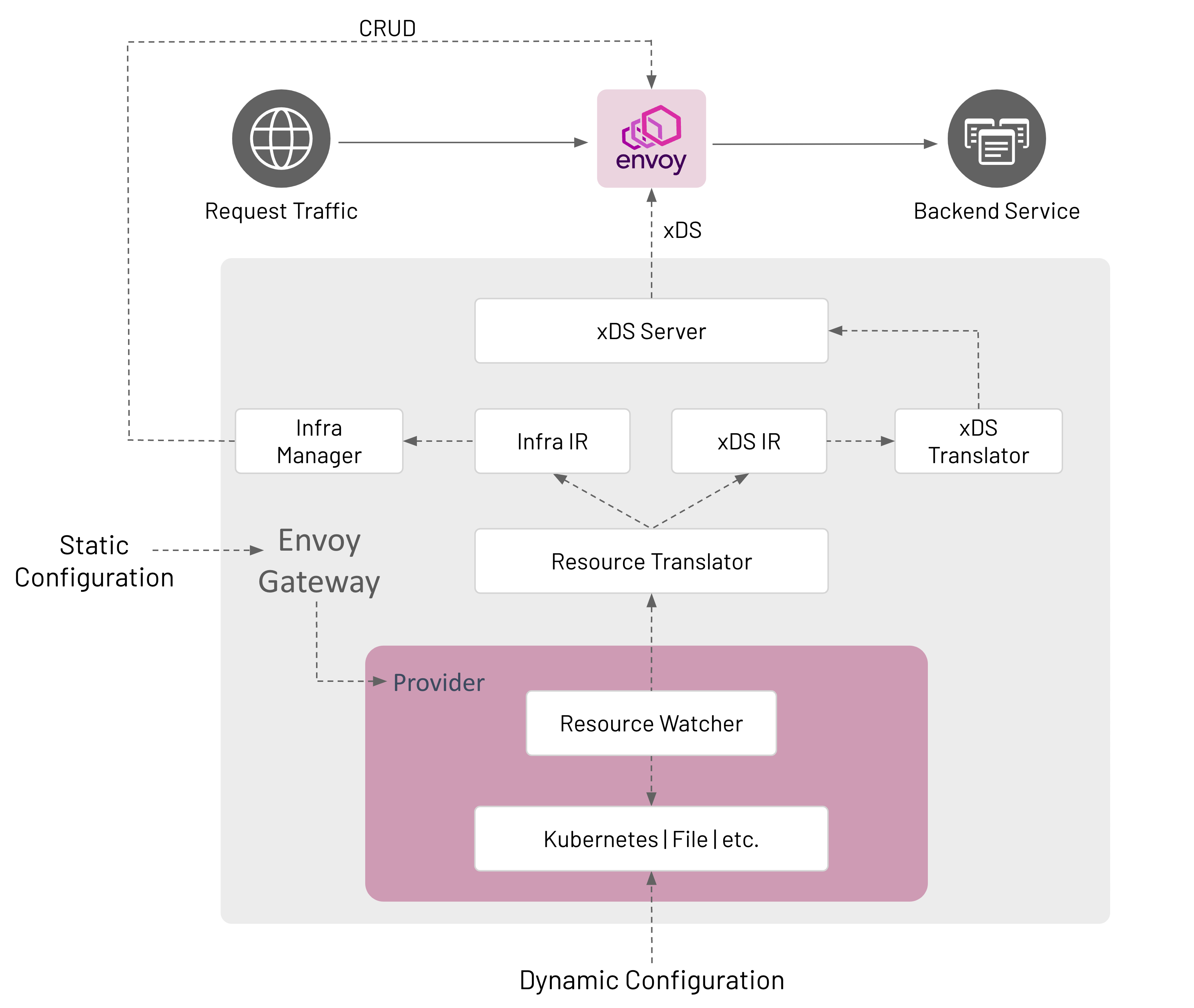

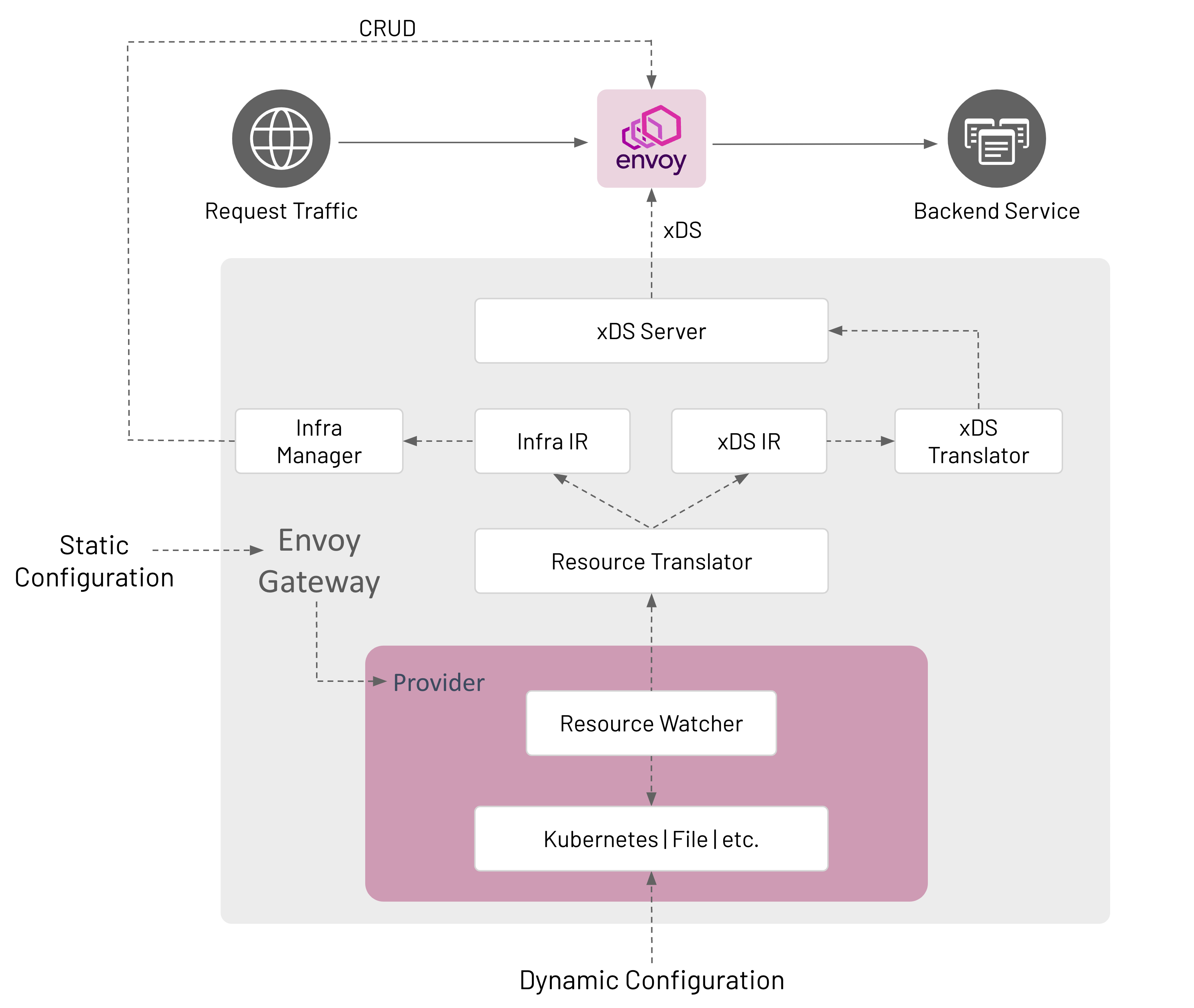

Envoy Gateway is made up of several components that communicate in-process; how this communication happens is described in the Watching Components Design.

Provider

A Provider is an infrastructure component that Envoy Gateway calls to establish its runtime configuration, resolve services, persist data, etc. As of v0.2, Kubernetes is the only implemented provider. A file provider is on the roadmap via Issue #37. Other providers can be added in the future as Envoy Gateway use cases are better understood. A provider is configured at startup through Envoy Gateway’s static configuration.

Kubernetes Provider

- Uses Kubernetes-style controllers to reconcile Kubernetes resources that comprise the dynamic configuration.

- Manages the data plane through Kubernetes API CRUD operations.

- Uses Kubernetes for Service discovery.

- Uses etcd (via Kubernetes API) to persist data.

File Provider

- Uses a file watcher to watch files in a directory that define the data plane configuration.

- Manages the data plane by calling internal APIs, e.g. CreateDataPlane().

- Uses the host’s DNS for Service discovery.

- If needed, the local filesystem is used to persist data.

Resource Watcher

The Resource Watcher watches resources used to establish and maintain Envoy Gateway’s dynamic configuration. The mechanics for watching resources are provider-specific, e.g. informers, caches, etc. are used for the Kubernetes provider. The Resource Watcher uses the configured provider for input and provides resources to the Resource Translator as output.

Resource Translator

The Resource Translator translates external resources, e.g. GatewayClass, from the Resource Watcher to the Intermediate Representation (IR). It is responsible for:

- Translating infrastructure-specific resources/fields from the Resource Watcher to the Infra IR.

- Translating proxy configuration resources/fields from the Resource Watcher to the xDS IR.

Note: The Resource Translator is implemented as the Translator API type in the gatewayapi package.

The Intermediate Representation defines internal data models that external resources are translated into. This allows Envoy Gateway to be decoupled from the external resources used for dynamic configuration. The IR consists of an Infra IR used as input for the Infra Manager and an xDS IR used as input for the xDS Translator.

- Infra IR - Used as the internal definition of the managed data plane infrastructure.

- xDS IR - Used as the internal definition of the managed data plane xDS configuration.

xDS Translator

The xDS Translator translates the xDS IR into xDS Resources that are consumed by the xDS server.

xDS Server

The xDS Server is an xDS gRPC Server based on Go Control Plane. Go Control Plane implements the Delta xDS Server Protocol and is responsible for using xDS to configure the data plane.

Infra Manager

The Infra Manager is a provider-specific component responsible for managing the following infrastructure:

- Data Plane - Manages all the infrastructure required to run the managed Envoy proxies. For example, CRUD Deployment, Service, etc. resources to run Envoy in a Kubernetes cluster.

- Auxiliary Control Planes - Optional infrastructure needed to implement application Gateway features that require external integrations with the managed Envoy proxies. For example, Global Rate Limiting requires provisioning and configuring the Envoy Rate Limit Service and the Rate Limit filter. Such features are exposed to users through the Custom Route Filters extension.

The Infra Manager consumes the Infra IR as input to manage the data plane infrastructure.

Design Decisions

- Envoy Gateway consumes one GatewayClass by comparing its configured controller name with spec.controllerName of a GatewayClass. If multiple GatewayClasses exist with the same spec.controllerName, Envoy Gateway follows Gateway API guidelines to resolve the conflict.

  - gatewayclass.spec.parametersRef refers to the EnvoyProxy custom resource for configuring the managed proxy infrastructure. If unspecified, default configuration parameters are used for the managed proxy infrastructure.

- Envoy Gateway manages Gateways that reference its GatewayClass.

  - A Gateway resource causes Envoy Gateway to provision managed Envoy proxy infrastructure.

  - Envoy Gateway groups Listeners by Port and collapses each group of Listeners into a single Listener if the Listeners in the group are compatible. Envoy Gateway considers Listeners to be compatible if all the following conditions are met:

    - Either each Listener within the group specifies the “HTTP” Protocol or each Listener within the group specifies either the “HTTPS” or “TLS” Protocol.

    - Each Listener within the group specifies a unique “Hostname”.

    - As a special case, one Listener within a group may omit “Hostname”, in which case this Listener matches when no other Listener matches.

  - Envoy Gateway does not merge listeners across multiple Gateways.

- Envoy Gateway follows Gateway API guidelines to resolve any conflicts.

- A Gateway listener corresponds to an Envoy proxy Listener.

- An HTTPRoute resource corresponds to an Envoy proxy Route.

- The goal is to make Envoy Gateway components extensible in the future. See the roadmap for additional details.
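The listener-grouping rules above translate fairly directly into code. The sketch below is a hypothetical illustration, not Envoy Gateway's implementation: `Listener` is a trimmed-down type, `groupByPort` and `compatible` are illustrative names, and protocols other than HTTP, HTTPS and TLS are simply treated as incompatible.

```go
package main

import "fmt"

// Listener is a hypothetical, trimmed-down Gateway listener.
type Listener struct {
	Name     string
	Protocol string // "HTTP", "HTTPS" or "TLS"
	Port     int32
	Hostname string // empty means no hostname was specified
}

// groupByPort groups listeners by port, preserving their relative order.
func groupByPort(ls []Listener) map[int32][]Listener {
	groups := map[int32][]Listener{}
	for _, l := range ls {
		groups[l.Port] = append(groups[l.Port], l)
	}
	return groups
}

// compatible reports whether a group of same-port listeners could be
// collapsed into one Envoy listener: all HTTP, or all HTTPS/TLS, with
// unique hostnames and at most one listener omitting its hostname.
func compatible(group []Listener) bool {
	allHTTP, allTLS := true, true
	seen := map[string]bool{}
	for _, l := range group {
		switch l.Protocol {
		case "HTTP":
			allTLS = false
		case "HTTPS", "TLS":
			allHTTP = false
		default:
			return false // other protocols are out of scope for this sketch
		}
		// An omitted hostname is tracked under the "" key, so a second
		// listener without a hostname is rejected like any duplicate.
		if seen[l.Hostname] {
			return false
		}
		seen[l.Hostname] = true
	}
	return allHTTP || allTLS
}

func main() {
	listeners := []Listener{
		{"http", "HTTP", 80, "*.envoygateway.io"},
		{"http-2", "HTTP", 80, "whales.envoygateway.io"},
		{"https", "HTTPS", 443, ""},
	}
	for port, group := range groupByPort(listeners) {
		fmt.Println(port, compatible(group))
	}
}
```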

The draft for this document is here.

1.3 - Watching Components Design

Envoy Gateway is made up of several components that communicate in-process. Some of them (namely Providers) watch external resources and “publish” what they see for other components to consume; others watch what another component publishes and act on it (for example, the resource translator watches what the providers publish, and then publishes its own results, which are watched by another component). Some of these internally published results are consumed by multiple components.

To facilitate this communication, Envoy Gateway uses the watchable library. The watchable.Map type is very similar to the standard library’s sync.Map type, but supports a .Subscribe (and .SubscribeSubset) method that promotes a pub/sub pattern.

Pub

Many of the things we communicate around are naturally named, either by a bare “name” string or by a “name”/“namespace” tuple. And because watchable.Map is typed, it makes sense to have one map for each type of thing (very similar to if we were using native Go maps). For example, a struct that might be written to by the Kubernetes provider, and read by the IR translator:

```go
import (
	"github.com/telepresenceio/watchable"
	"k8s.io/apimachinery/pkg/types"
	gwapiv1b1 "sigs.k8s.io/gateway-api/apis/v1beta1"
)

type ResourceTable struct {
	// gateway classes are cluster-scoped; no namespace
	GatewayClasses watchable.Map[string, *gwapiv1b1.GatewayClass]

	// gateways are namespace-scoped, so use a k8s.io/apimachinery/pkg/types.NamespacedName as the map key.
	Gateways watchable.Map[types.NamespacedName, *gwapiv1b1.Gateway]

	HTTPRoutes watchable.Map[types.NamespacedName, *gwapiv1b1.HTTPRoute]
}
```

The Kubernetes provider updates the table by calling table.Thing.Store(name, val) and table.Thing.Delete(name). Updating a map key with a value that is deep-equal to the current value (usually via reflect.DeepEqual, but you can implement your own .Equal method) is a no-op; it won’t trigger an event for subscribers. This is handy because the publisher doesn’t have as much state to keep track of; it doesn’t need to know “did I already publish this thing”, it can just .Store its data and watchable will do the right thing.

Sub

Meanwhile, the translator and other interested components subscribe to it with table.Thing.Subscribe (or table.Thing.SubscribeSubset if they only care about a few “Things”). So the translator goroutine might look like:

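```go
func(ctx context.Context) error {
	// Each receive yields a full snapshot of the HTTPRoutes map; the other
	// maps are read explicitly with LoadAll.
	for snapshot := range k8sTable.HTTPRoutes.Subscribe(ctx) {
		fullState := irInput{
			GatewayClasses: k8sTable.GatewayClasses.LoadAll(),
			Gateways:       k8sTable.Gateways.LoadAll(),
			HTTPRoutes:     snapshot.State,
		}
		translate(fullState)
	}
	// The subscription channel is closed once ctx is done.
	return nil
}
```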

Or, to watch multiple maps in the same loop:

```go
func worker(ctx context.Context) error {
	classCh := k8sTable.GatewayClasses.Subscribe(ctx)
	gwCh := k8sTable.Gateways.Subscribe(ctx)
	routeCh := k8sTable.HTTPRoutes.Subscribe(ctx)
	for ctx.Err() == nil {
		var arg irInput
		// Take whichever map changed; a closed channel means ctx is done.
		select {
		case snapshot, ok := <-classCh:
			if !ok {
				return nil
			}
			arg.GatewayClasses = snapshot.State
		case snapshot, ok := <-gwCh:
			if !ok {
				return nil
			}
			arg.Gateways = snapshot.State
		case snapshot, ok := <-routeCh:
			if !ok {
				return nil
			}
			arg.HTTPRoutes = snapshot.State
		}
		// Fill in the maps that did not change from their current contents.
		if arg.GatewayClasses == nil {
			arg.GatewayClasses = k8sTable.GatewayClasses.LoadAll()
		}
		if arg.Gateways == nil {
			arg.Gateways = k8sTable.Gateways.LoadAll()
		}
		if arg.HTTPRoutes == nil {
			arg.HTTPRoutes = k8sTable.HTTPRoutes.LoadAll()
		}
		translate(arg)
	}
	return nil
}
```

From the updates it gets from .Subscribe, it can get a full view of the map being subscribed to via snapshot.State; but it must read the other maps explicitly. Like sync.Map, watchable.Maps are thread-safe; while .Subscribe is a handy way to know when to run, .Load and friends can be used without subscribing.

There can be any number of subscribers. For that matter, there can be any number of publishers calling .Store, but it’s probably wise to have just one publisher for each map.

The channel returned from .Subscribe is immediately readable with a snapshot of the map as it existed when .Subscribe was called, and becomes readable again whenever .Store or .Delete mutates the map. If multiple mutations happen between reads (or if mutations happen between .Subscribe and the first read), they are coalesced into one snapshot to be read; snapshot.State is the most recent full state, and snapshot.Updates is a list of each of the mutations that caused this snapshot to differ from the last-read one. This way, subscribers don’t need to worry about a backlog accumulating if they can’t keep up with the rate of changes from the publisher.

If the map contains anything before .Subscribe is called, that very first read won’t include snapshot.Updates entries for those pre-existing items; if you are working with snapshot.Updates instead of snapshot.State, then you must add special handling for your first read. We have a utility function ./internal/message.HandleSubscription to help with this.

Other Notes

The common pattern will likely be that the entrypoint that launches the goroutines for each component instantiates the maps and passes them to the appropriate publishers and subscribers, the same as if they were communicating via a plain chan.

A limitation of watchable.Map is that, in order to ensure safety between goroutines, it requires that value types be deep-copiable: either by having a DeepCopy method, by being a proto.Message, or by containing no reference types so that they can be deep-copied by naive assignment. Fortunately, we’re using controller-gen anyway, and controller-gen can generate DeepCopy methods for us: just add a // +k8s:deepcopy-gen=true marker to the types that you want it to generate methods for.
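The reason for the deep-copy requirement is easy to demonstrate: if a value containing a slice is copied by naive assignment, a subscriber mutating its copy also mutates the publisher’s value. The `RouteSpec` type and its hand-written DeepCopy below are hypothetical; controller-gen would generate an equivalent method for annotated types.

```go
package main

import "fmt"

// RouteSpec is a hypothetical type containing a reference type (a slice).
type RouteSpec struct {
	Hostnames []string
}

// DeepCopy returns a copy that shares no memory with the receiver, in the
// style of generated DeepCopy methods.
func (in *RouteSpec) DeepCopy() *RouteSpec {
	if in == nil {
		return nil
	}
	out := new(RouteSpec)
	if in.Hostnames != nil {
		out.Hostnames = make([]string, len(in.Hostnames))
		copy(out.Hostnames, in.Hostnames)
	}
	return out
}

func main() {
	orig := &RouteSpec{Hostnames: []string{"www.example.com"}}

	shallow := *orig // naive assignment copies only the slice header
	shallow.Hostnames[0] = "evil.example.com"
	fmt.Println(orig.Hostnames[0]) // evil.example.com: the original changed too

	orig.Hostnames[0] = "www.example.com"
	deep := orig.DeepCopy()
	deep.Hostnames[0] = "other.example.com"
	fmt.Println(orig.Hostnames[0]) // www.example.com: unaffected
}
```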

1.4 - Gateway API Translator Design

The Gateway API translator translates external resources, e.g. GatewayClass, from the configured Provider to the Intermediate Representation (IR).

Assumptions

Initially target core conformance features only, to be followed by extended conformance features.

The main inputs to the Gateway API translator are:

- GatewayClass, Gateway, HTTPRoute, TLSRoute, Service, ReferenceGrant, Namespace, and Secret resources.

Note: ReferenceGrant is not fully implemented as of v0.2.

The outputs of the Gateway API translator are:

- xDS and Infra Intermediate Representations (IRs).

- Status updates for GatewayClass, Gateways, HTTPRoutes

Listener Compatibility

Envoy Gateway follows the Gateway API listener compatibility spec:

Each listener in a Gateway must have a unique combination of Hostname, Port, and Protocol. An implementation MAY group Listeners by Port and then collapse each group of Listeners into a single Listener if the implementation determines that the Listeners in the group are “compatible”.

Note: Envoy Gateway does not collapse listeners across multiple Gateways.

Listener Compatibility Examples

Example 1: Gateway with compatible Listeners (same port & protocol, different hostnames)

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: gateway-1
  namespace: envoy-gateway
spec:
  gatewayClassName: envoy-gateway
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
    hostname: "*.envoygateway.io"
  - name: http-2
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
    hostname: whales.envoygateway.io
```

Example 2: Gateway with compatible Listeners (same port & protocol, one hostname specified, one not)

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: gateway-1
  namespace: envoy-gateway
spec:
  gatewayClassName: envoy-gateway
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
    hostname: "*.envoygateway.io"
  - name: http-2
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
```

Example 3: Gateway with incompatible Listeners (same port, protocol and hostname)

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: gateway-1
  namespace: envoy-gateway
spec:
  gatewayClassName: envoy-gateway
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
    hostname: whales.envoygateway.io
  - name: http-2
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
    hostname: whales.envoygateway.io
```

Example 4: Gateway with incompatible Listeners (neither specify a hostname)

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: gateway-1
  namespace: envoy-gateway
spec:
  gatewayClassName: envoy-gateway
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
  - name: http-2
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
```

Computing Status

Gateway API specifies a rich set of status fields & conditions for each resource. To achieve conformance, Envoy Gateway must compute the appropriate status fields and conditions for managed resources.

Status is computed and set for:

- The managed GatewayClass (gatewayclass.status.conditions).

- Each managed Gateway, based on its Listeners’ status (gateway.status.conditions). For the Kubernetes provider, the Envoy Deployment and Service status are also included to calculate Gateway status.

- Listeners for each Gateway (gateway.status.listeners).

- The ParentRef for each Route (route.status.parents).

The Gateway API translator is responsible for calculating status conditions while translating Gateway API resources to the IR and publishing status over the message bus. The Status Manager subscribes to these status messages and updates the resource status using the configured provider. For example, the Status Manager uses a Kubernetes client to update resource status on the Kubernetes API server.
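As a rough illustration of deriving Gateway status from Listener status plus, for the Kubernetes provider, the Envoy Deployment status, the sketch below reports the Gateway as ready only when the Deployment and every listener are ready. The `Condition` type, the reason strings, and this all-or-nothing rule are hypothetical simplifications; the real condition types and reasons are defined by the Gateway API specification.

```go
package main

import "fmt"

// Condition is a hypothetical, trimmed-down status condition.
type Condition struct {
	Type   string
	Status bool
	Reason string
}

// ListenerStatus holds the computed readiness of one Gateway listener.
type ListenerStatus struct {
	Name  string
	Ready bool
}

// gatewayCondition derives a Gateway-level condition from the Envoy
// Deployment readiness and the status of each listener.
func gatewayCondition(listeners []ListenerStatus, deploymentReady bool) Condition {
	if !deploymentReady {
		return Condition{Type: "Ready", Status: false, Reason: "DeploymentNotReady"}
	}
	for _, l := range listeners {
		if !l.Ready {
			return Condition{Type: "Ready", Status: false, Reason: "ListenerNotReady: " + l.Name}
		}
	}
	return Condition{Type: "Ready", Status: true, Reason: "AllListenersReady"}
}

func main() {
	c := gatewayCondition([]ListenerStatus{{"http", true}, {"https", false}}, true)
	fmt.Printf("%s=%v (%s)\n", c.Type, c.Status, c.Reason)
}
```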

Outline

The following roughly outlines the translation process. Each step may produce (1) IR and (2) status updates on Gateway API resources.

Process Gateway Listeners

- Validate unique hostnames, ports, and protocols.

- Validate and compute supported kinds.

- Validate allowed namespaces (validate selector if specified).

- Validate TLS fields if specified, including resolving referenced Secrets.

Process HTTPRoutes

- foreach route rule:
  - compute matches
    - [core] path exact, path prefix
    - [core] header exact
    - [extended] query param exact
    - [extended] HTTP method
  - compute filters
    - [core] request header modifier (set/add/remove)
    - [core] request redirect (hostname, statuscode)
    - [extended] request mirror
  - compute backends
    - [core] Kubernetes services
- foreach route parent ref:
  - get matching listeners (check Gateway, section name, listener validation status, listener allowed routes, hostname intersection)
  - foreach matching listener:
    - foreach hostname intersection with route:
      - add each computed route rule to host

Context Structs

To help store, access and manipulate information as it’s processed during the translation process, a set of context structs are used. These structs wrap a given Gateway API type, and add additional fields and methods to support processing.

GatewayContext wraps a Gateway and provides helper methods for setting conditions, accessing Listeners, etc.

```go
import "sigs.k8s.io/gateway-api/apis/v1beta1"

type GatewayContext struct {
	// The managed Gateway
	*v1beta1.Gateway

	// A list of Gateway ListenerContexts.
	listeners []*ListenerContext
}
```

ListenerContext wraps a Listener and provides helper methods for setting conditions and other status information on the associated Gateway.

```go
import (
	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/labels"
	"sigs.k8s.io/gateway-api/apis/v1beta1"
)

type ListenerContext struct {
	// The Gateway listener.
	*v1beta1.Listener

	// The Gateway this Listener belongs to.
	gateway *v1beta1.Gateway

	// An index used for managing this listener in the list of Gateway listeners.
	listenerStatusIdx int

	// Only Routes in namespaces selected by the selector may be attached
	// to the Gateway this listener belongs to.
	namespaceSelector labels.Selector

	// The TLS Secret for this Listener, if applicable.
	tlsSecret *v1.Secret
}
```

RouteContext represents a generic Route object (HTTPRoute, TLSRoute, etc.) that can reference Gateway objects.

```go
import (
	"sigs.k8s.io/controller-runtime/pkg/client"
	"sigs.k8s.io/gateway-api/apis/v1beta1"
)

type RouteContext interface {
	client.Object

	// GetRouteType returns the Kind of the Route object, HTTPRoute,
	// TLSRoute, TCPRoute, UDPRoute etc.
	GetRouteType() string

	// GetHostnames returns the hosts targeted by the Route object.
	GetHostnames() []string

	// GetParentReferences returns the ParentReference of the Route object.
	GetParentReferences() []v1beta1.ParentReference

	// GetRouteParentContext returns RouteParentContext by using the Route
	// object's ParentReference.
	GetRouteParentContext(forParentRef v1beta1.ParentReference) *RouteParentContext
}
```

1.5 - Configuration API Design

Motivation

Issue 51 specifies the need to design an API for configuring Envoy Gateway. The control plane is configured statically at startup and the data plane is configured dynamically through Kubernetes resources, primarily Gateway API objects. Refer to the Envoy Gateway design doc for additional details regarding Envoy Gateway terminology and configuration.

Goals

- Define an initial API to configure Envoy Gateway at startup.

- Define an initial API for configuring the managed data plane, e.g. Envoy proxies.

Non-Goals

- Implementation of the configuration APIs.

- Define the status subresource of the configuration APIs.

- Define a complete set of APIs for configuring Envoy Gateway. As stated in the Goals, this document defines the initial configuration APIs.

- Define an API for deploying/provisioning/operating Envoy Gateway. If needed, a future Envoy Gateway operator would be responsible for designing and implementing this type of API.

- Specify tooling for managing the API, e.g. generate protos, CRDs, controller RBAC, etc.

Control Plane API

The EnvoyGateway API defines the control plane configuration, e.g. Envoy Gateway. Key points of this API are:

- It will define Envoy Gateway’s startup configuration file. If the file does not exist, Envoy Gateway will start up with default configuration parameters.

- EnvoyGateway inlines the TypeMeta API. This allows EnvoyGateway to be versioned and managed as a GroupVersionKind scheme.

- EnvoyGateway does not contain a metadata field since it’s currently represented as a static configuration file instead of a Kubernetes resource.

- Since EnvoyGateway does not surface status, EnvoyGatewaySpec is inlined.

- If data plane static configuration is required in the future, Envoy Gateway will use a separate file for this purpose.

The v1alpha1 version and config.gateway.envoyproxy.io API group get generated:

```go
// gateway/api/config/v1alpha1/doc.go

// Package v1alpha1 contains API Schema definitions for the config.gateway.envoyproxy.io API group.
//
// +groupName=config.gateway.envoyproxy.io
package v1alpha1
```

The initial EnvoyGateway API:

```go
// gateway/api/config/v1alpha1/envoygateway.go

package v1alpha1

import (
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// EnvoyGateway is the Schema for the envoygateways API
type EnvoyGateway struct {
	metav1.TypeMeta `json:",inline"`

	// EnvoyGatewaySpec defines the desired state of Envoy Gateway.
	EnvoyGatewaySpec `json:",inline"`
}

// EnvoyGatewaySpec defines the desired state of Envoy Gateway configuration.
type EnvoyGatewaySpec struct {
	// Gateway defines Gateway-API specific configuration. If unset, default
	// configuration parameters will apply.
	//
	// +optional
	Gateway *Gateway `json:"gateway,omitempty"`

	// Provider defines the desired provider configuration. If unspecified,
	// the Kubernetes provider is used with default parameters.
	//
	// +optional
	Provider *Provider `json:"provider,omitempty"`
}

// Gateway defines desired Gateway API configuration of Envoy Gateway.
type Gateway struct {
	// ControllerName defines the name of the Gateway API controller. If unspecified,
	// defaults to "gateway.envoyproxy.io/gatewayclass-controller". See the following
	// for additional details:
	//
	//   https://gateway-api.sigs.k8s.io/v1alpha2/references/spec/#gateway.networking.k8s.io/v1alpha2.GatewayClass
	//
	// +optional
	ControllerName string `json:"controllerName,omitempty"`
}

// Provider defines the desired configuration of a provider.
// +union
type Provider struct {
	// Type is the type of provider to use. If unset, the Kubernetes provider is used.
	//
	// +unionDiscriminator
	Type ProviderType `json:"type,omitempty"`

	// Kubernetes defines the configuration of the Kubernetes provider. Kubernetes
	// provides runtime configuration via the Kubernetes API.
	//
	// +optional
	Kubernetes *KubernetesProvider `json:"kubernetes,omitempty"`

	// File defines the configuration of the File provider. File provides runtime
	// configuration defined by one or more files.
	//
	// +optional
	File *FileProvider `json:"file,omitempty"`
}

// ProviderType defines the types of providers supported by Envoy Gateway.
type ProviderType string

const (
	// KubernetesProviderType defines the "Kubernetes" provider.
	KubernetesProviderType ProviderType = "Kubernetes"

	// FileProviderType defines the "File" provider.
	FileProviderType ProviderType = "File"
)

// KubernetesProvider defines configuration for the Kubernetes provider.
type KubernetesProvider struct {
	// TODO: Add config as use cases are better understood.
}

// FileProvider defines configuration for the File provider.
type FileProvider struct {
	// TODO: Add config as use cases are better understood.
}
```

Note: Provider-specific configuration is defined in the {$PROVIDER_NAME}Provider API.

Gateway

Gateway defines desired configuration of Gateway API controllers that reconcile and translate Gateway API resources into the Intermediate Representation (IR). Refer to the Envoy Gateway design doc for additional details.

Provider

Provider defines the desired configuration of an Envoy Gateway provider. A provider is an infrastructure component that Envoy Gateway calls to establish its runtime configuration. Provider is a union type. Therefore, Envoy Gateway can be configured with only one provider based on the type discriminator field. Refer to the Envoy Gateway design doc for additional details.
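Because Provider is a union, a configuration loader would typically check that the discriminator and the populated member agree. The following is a hypothetical validation helper written against trimmed copies of the Provider types above; `validateProvider` and its error messages are illustrative and not part of the proposed API.

```go
package main

import (
	"errors"
	"fmt"
)

type ProviderType string

const (
	KubernetesProviderType ProviderType = "Kubernetes"
	FileProviderType       ProviderType = "File"
)

type KubernetesProvider struct{}
type FileProvider struct{}

// Provider is a trimmed copy of the union type above (JSON tags omitted).
type Provider struct {
	Type       ProviderType
	Kubernetes *KubernetesProvider
	File       *FileProvider
}

// validateProvider checks the union invariant: at most one member is set,
// and it must match Type. An unset Type means Kubernetes, per the API docs.
func validateProvider(p *Provider) error {
	if p == nil {
		return nil // defaults apply
	}
	if p.Kubernetes != nil && p.File != nil {
		return errors.New("only one of provider.kubernetes or provider.file may be set")
	}
	switch p.Type {
	case "", KubernetesProviderType:
		if p.File != nil {
			return errors.New("provider.file is set but the provider type is Kubernetes")
		}
	case FileProviderType:
		if p.Kubernetes != nil {
			return errors.New("provider.kubernetes is set but the provider type is File")
		}
	default:
		return fmt.Errorf("unsupported provider type %q", p.Type)
	}
	return nil
}

func main() {
	p := &Provider{Type: FileProviderType, Kubernetes: &KubernetesProvider{}}
	fmt.Println(validateProvider(p))
}
```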

Control Plane Configuration

The configuration file is defined by the EnvoyGateway API type. At startup, Envoy Gateway searches for the configuration at “/etc/envoy-gateway/config.yaml”.

Start Envoy Gateway:

Since the configuration file does not exist, Envoy Gateway will start with default configuration parameters.

The Kubernetes provider can be configured explicitly using provider.kubernetes:

```shell
$ cat << EOF > /etc/envoy-gateway/config.yaml
apiVersion: config.gateway.envoyproxy.io/v1alpha1
kind: EnvoyGateway
provider:
  type: Kubernetes
  kubernetes: {}
EOF
```

This configuration will cause Envoy Gateway to use the Kubernetes provider with default configuration parameters.

The Kubernetes provider can be configured using the provider field. For example, the foo field can be set to “bar”:

```shell
$ cat << EOF > /etc/envoy-gateway/config.yaml
apiVersion: config.gateway.envoyproxy.io/v1alpha1
kind: EnvoyGateway
provider:
  type: Kubernetes
  kubernetes:
    foo: bar
EOF
```

Note: The Provider API from the Kubernetes package is currently undefined and foo: bar is provided for illustration purposes only.

The same API structure is followed for each supported provider. The following example causes Envoy Gateway to use the File provider:

```shell
$ cat << EOF > /etc/envoy-gateway/config.yaml
apiVersion: config.gateway.envoyproxy.io/v1alpha1
kind: EnvoyGateway
provider:
  type: File
  file:
    foo: bar
EOF
```

Note: The Provider API from the File package is currently undefined and foo: bar is provided for illustration purposes only.

Gateway API-related configuration is expressed through the gateway field. If unspecified, Envoy Gateway will use default configuration parameters for gateway. The following example causes the GatewayClass controller to manage GatewayClasses with controllerName foo instead of the default gateway.envoyproxy.io/gatewayclass-controller:

$ cat << EOF > /etc/envoy-gateway/config.yaml
apiVersion: config.gateway.envoyproxy.io/v1alpha1
kind: EnvoyGateway
gateway:
  controllerName: foo
EOF
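The controllerName setting decides which GatewayClasses this Envoy Gateway instance manages. The Go sketch below illustrates that filtering with a simplified, hypothetical GatewayClass struct (not the Gateway API types), falling back to the default controller name when none is configured.

```go
package main

import "fmt"

// GatewayClass is a minimal stand-in for the Gateway API resource.
type GatewayClass struct {
	Name           string
	ControllerName string
}

const defaultControllerName = "gateway.envoyproxy.io/gatewayclass-controller"

// managedClasses returns the classes whose spec.controllerName equals the
// configured name, using the default name when none is configured.
func managedClasses(configured string, classes []GatewayClass) []GatewayClass {
	name := configured
	if name == "" {
		name = defaultControllerName
	}
	var out []GatewayClass
	for _, gc := range classes {
		if gc.ControllerName == name {
			out = append(out, gc)
		}
	}
	return out
}

func main() {
	classes := []GatewayClass{
		{Name: "eg", ControllerName: defaultControllerName},
		{Name: "custom", ControllerName: "foo"},
	}
	fmt.Println(managedClasses("", classes))    // [{eg gateway.envoyproxy.io/gatewayclass-controller}]
	fmt.Println(managedClasses("foo", classes)) // [{custom foo}]
}
```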

With any of the above configuration examples, Envoy Gateway can be started without any additional arguments:

Data Plane API

The data plane is configured dynamically through Kubernetes resources, primarily Gateway API objects.

Optionally, the data plane infrastructure can be configured by referencing a custom resource (CR) through spec.parametersRef of the managed GatewayClass. The EnvoyProxy API defines the data plane infrastructure configuration and is represented as the CR referenced by the managed GatewayClass. Key points of this API are:

- If unreferenced by gatewayclass.spec.parametersRef, default parameters will be used to configure the data plane infrastructure, e.g. expose Envoy network endpoints using a LoadBalancer service.
- Envoy Gateway will follow Gateway API recommendations regarding updates to the EnvoyProxy CR:

  It is recommended that this resource be used as a template for Gateways. This means that a Gateway is based on the state of the GatewayClass at the time it was created and changes to the GatewayClass or associated parameters are not propagated down to existing Gateways.
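The two key points above can be sketched in Go: a missing parametersRef falls back to a LoadBalancer service, and a Gateway copies its infrastructure settings at creation time, so later EnvoyProxy edits do not reach it. The EnvoyProxy and Gateway structs, the NetworkPublishingType field, and the LoadBalancerService string are hypothetical, since the design leaves EnvoyProxySpec undefined. ClusterIPService is taken from the illustrative example later in this section.

```go
package main

import "fmt"

// Illustrative publishing types; neither is a defined API value.
const (
	LoadBalancerService = "LoadBalancerService"
	ClusterIPService    = "ClusterIPService"
)

// EnvoyProxy is a hypothetical stand-in for the CR referenced by
// spec.parametersRef; only an illustrative publishing field is modelled.
type EnvoyProxy struct {
	NetworkPublishingType string
}

// Gateway keeps a copy of the settings resolved when it was created.
type Gateway struct {
	Name        string
	ServiceType string
}

// serviceTypeFor returns the default LoadBalancer service when the
// GatewayClass has no parametersRef (params == nil) or sets no type.
func serviceTypeFor(params *EnvoyProxy) string {
	if params == nil || params.NetworkPublishingType == "" {
		return LoadBalancerService
	}
	return params.NetworkPublishingType
}

// newGateway resolves the parameters once, treating them as a template.
func newGateway(name string, params *EnvoyProxy) Gateway {
	return Gateway{Name: name, ServiceType: serviceTypeFor(params)}
}

func main() {
	params := &EnvoyProxy{NetworkPublishingType: ClusterIPService}
	gw := newGateway("example-gateway", params)
	params.NetworkPublishingType = LoadBalancerService // a later edit to the CR
	fmt.Println(gw.ServiceType)                        // ClusterIPService
	fmt.Println(serviceTypeFor(nil))                   // LoadBalancerService
}
```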

The initial EnvoyProxy API:

// gateway/api/config/v1alpha1/envoyproxy.go

package v1alpha1

import (
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// EnvoyProxy is the Schema for the envoyproxies API.
type EnvoyProxy struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   EnvoyProxySpec   `json:"spec,omitempty"`
	Status EnvoyProxyStatus `json:"status,omitempty"`
}

// EnvoyProxySpec defines the desired state of Envoy Proxy infrastructure
// configuration.
type EnvoyProxySpec struct {
	// Undefined by this design spec.
}

// EnvoyProxyStatus defines the observed state of EnvoyProxy.
type EnvoyProxyStatus struct {
	// Undefined by this design spec.
}

The EnvoyProxySpec and EnvoyProxyStatus fields will be defined in the future as proxy infrastructure configuration use cases are better understood.

Data Plane Configuration

GatewayClass and Gateway resources define the data plane infrastructure. Note that all examples assume Envoy Gateway is running with the Kubernetes provider.

apiVersion: gateway.networking.k8s.io/v1beta1
kind: GatewayClass
metadata:
  name: example-class
spec:
  controllerName: gateway.envoyproxy.io/gatewayclass-controller
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class
  listeners:
    - name: http
      protocol: HTTP
      port: 80

Since the GatewayClass does not define spec.parametersRef, the data plane is provisioned using default configuration parameters. The Envoy proxies will be configured with an HTTP listener and a Kubernetes LoadBalancer service listening on port 80.

The following example will configure the data plane to use a ClusterIP service instead of the default LoadBalancer service:

apiVersion: gateway.networking.k8s.io/v1beta1
kind: GatewayClass
metadata:
  name: example-class
spec:
  controllerName: gateway.envoyproxy.io/gatewayclass-controller
  parametersRef:
    name: example-config
    group: config.gateway.envoyproxy.io
    kind: EnvoyProxy
---
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class
  listeners:
    - name: http
      protocol: HTTP
      port: 80
---
apiVersion: config.gateway.envoyproxy.io/v1alpha1
kind: EnvoyProxy
metadata:
  name: example-config
spec:
  networkPublishing:
    type: ClusterIPService

Note: The NetworkPublishing API is currently undefined and is provided here for illustration purposes only.

2 - User Guides

This section includes User Guides of Envoy Gateway.

2.1 - Quickstart

This guide will help you get started with Envoy Gateway in a few simple steps.

Prerequisites

A Kubernetes cluster.

Note: Refer to the Compatibility Matrix for supported Kubernetes versions.

Installation

Install the Gateway API CRDs and Envoy Gateway:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/v0.2.0/install.yaml

Wait for Envoy Gateway to become available:

kubectl wait --timeout=5m -n envoy-gateway-system deployment/envoy-gateway --for=condition=Available

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/v0.2.0/quickstart.yaml

Testing the Configuration

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:8080 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

External LoadBalancer Support

You can also test the same functionality by sending traffic to the External IP. To get the external IP of the Envoy service, run:

export GATEWAY_HOST=$(kubectl get svc/${ENVOY_SERVICE} -n envoy-gateway-system -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

In certain environments, the load balancer may be exposed using a hostname instead of an IP address. If so, replace ip in the above command with hostname.
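Choosing between the ip and hostname fields can also be done programmatically. The Go sketch below uses a local LoadBalancerIngress struct that mirrors only the ip and hostname fields of a Service's status.loadBalancer.ingress entries. It is an illustration, not a client-go call, and the hostname in the usage example is made up.

```go
package main

import "fmt"

// LoadBalancerIngress mirrors only the ip and hostname fields of a
// Service's status.loadBalancer.ingress entries.
type LoadBalancerIngress struct {
	IP       string
	Hostname string
}

// gatewayHost returns the first ingress address, preferring the IP and
// falling back to the hostname. ok is false if nothing is assigned yet.
func gatewayHost(ingress []LoadBalancerIngress) (host string, ok bool) {
	if len(ingress) == 0 {
		return "", false
	}
	if ip := ingress[0].IP; ip != "" {
		return ip, true
	}
	if name := ingress[0].Hostname; name != "" {
		return name, true
	}
	return "", false
}

func main() {
	// "lb.example.com" is a made-up hostname for illustration.
	fmt.Println(gatewayHost([]LoadBalancerIngress{{Hostname: "lb.example.com"}})) // lb.example.com true
}
```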

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST:8080/get

Clean-Up

Use the steps in this section to uninstall everything from the quickstart guide.

Delete the GatewayClass, Gateway, HTTPRoute and Example App:

kubectl delete -f https://github.com/envoyproxy/gateway/releases/download/v0.2.0/quickstart.yaml --ignore-not-found=true

Delete the Gateway API CRDs and Envoy Gateway:

kubectl delete -f https://github.com/envoyproxy/gateway/releases/download/v0.2.0/install.yaml --ignore-not-found=true

Next Steps

Check out the Developer Guide to get involved in the project.

2.2 - HTTP Redirects

The HTTPRoute resource can issue redirects to clients or rewrite paths sent upstream using filters. Note that HTTPRoute rules cannot use both filter types at once. At the time of this writing, Envoy Gateway only supports the core HTTPRoute filters, which consist of RequestRedirect and RequestHeaderModifier. To learn more about HTTP routing, refer to the Gateway API documentation.

Prerequisites

Follow the steps from the Secure Gateways guide to install Envoy Gateway and the example manifest.

Before proceeding, you should be able to query the example backend using HTTPS.

Redirects

Redirects return HTTP 3XX responses to a client, instructing it to retrieve a different resource. A RequestRedirect filter instructs Gateways to emit a redirect response to requests that match the rule.

For example, to issue a permanent redirect (301) from HTTP to HTTPS, configure requestRedirect.statusCode=301 and requestRedirect.scheme="https":

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-to-https-filter-redirect
spec:
  parentRefs:
    - name: eg
  hostnames:
    - redirect.example
  rules:
    - filters:
        - type: RequestRedirect
          requestRedirect:
            scheme: https
            statusCode: 301
            hostname: www.example.com
            port: 8443
      backendRefs:
        - name: backend
          port: 3000
EOF

Note: 301 (default) and 302 are the only supported statusCodes.
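The redirect above can be reasoned about as a function from the request URL to a Location header. The Go sketch below is an approximation, not Envoy's implementation: RequestRedirect is a hypothetical mirror of the filter fields, and the port handling only loosely follows the Gateway API rules (an explicit port wins, a scheme change implies the scheme's well-known port, otherwise the request's port is kept). IPv6 literal hosts are not handled.

```go
package main

import (
	"errors"
	"fmt"
	"net/url"
	"strconv"
)

// RequestRedirect holds the filter fields used in this guide. Empty strings
// and zero values mean "not configured".
type RequestRedirect struct {
	Scheme     string
	Hostname   string
	Port       int
	StatusCode int
}

// redirect returns the status code and Location header for rawURL.
func redirect(r RequestRedirect, rawURL string) (int, string, error) {
	code := r.StatusCode
	if code == 0 {
		code = 301 // documented default
	}
	if code != 301 && code != 302 {
		return 0, "", errors.New("only 301 and 302 are supported")
	}
	u, err := url.Parse(rawURL)
	if err != nil {
		return 0, "", err
	}
	host, port := u.Hostname(), u.Port()
	if r.Scheme != "" {
		u.Scheme = r.Scheme
		port = "" // imply the new scheme's well-known port
	}
	if r.Hostname != "" {
		host = r.Hostname
	}
	if r.Port != 0 {
		port = strconv.Itoa(r.Port)
	}
	u.Host = host
	if port != "" {
		u.Host = host + ":" + port
	}
	return code, u.String(), nil
}

func main() {
	r := RequestRedirect{Scheme: "https", StatusCode: 301, Hostname: "www.example.com", Port: 8443}
	fmt.Println(redirect(r, "http://redirect.example:8080/get")) // 301 https://www.example.com:8443/get <nil>
}
```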

The HTTPRoute status should indicate that it has been accepted and is bound to the example Gateway.

kubectl get httproute/http-to-https-filter-redirect -o yaml

Get the Gateway’s address:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Querying redirect.example/get should result in a 301 response from the example Gateway, redirecting the client to the configured redirect hostname.

$ curl -L -vvv --header "Host: redirect.example" "http://${GATEWAY_HOST}:8080/get"
...
< HTTP/1.1 301 Moved Permanently
< location: https://www.example.com:8443/get
...

If you followed the steps in the Secure Gateways guide, you should be able to curl the redirect location.

Path Redirects

Path redirects use an HTTP Path Modifier to replace either entire paths or path prefixes. For example, the HTTPRoute below will issue a 302 redirect to /status/200 for all path.redirect.example requests whose path begins with /get.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-filter-path-redirect
spec:
  parentRefs:
    - name: eg
  hostnames:
    - path.redirect.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /get
      filters:
        - type: RequestRedirect
          requestRedirect:
            path:
              type: ReplaceFullPath
              replaceFullPath: /status/200
            statusCode: 302
      backendRefs:
        - name: backend
          port: 3000
EOF

The HTTPRoute status should indicate that it has been accepted and is bound to the example Gateway.

kubectl get httproute/http-filter-path-redirect -o yaml

Querying path.redirect.example should result in a 302 response from the example Gateway and a redirect location containing the configured redirect path.

Query the path.redirect.example host:

curl -vvv --header "Host: path.redirect.example" "http://${GATEWAY_HOST}:8080/get"

You should receive a 302 with a redirect location of http://path.redirect.example/status/200.
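The path modifier has two variants. The Go sketch below shows both, assuming a hypothetical PathModifier struct and that the rule already matched the PathPrefix value passed as matchedPrefix. ReplacePrefixMatch is included only for comparison; this guide uses ReplaceFullPath.

```go
package main

import (
	"fmt"
	"strings"
)

// PathModifier mirrors the path fields of a requestRedirect filter.
type PathModifier struct {
	Type               string // "ReplaceFullPath" or "ReplacePrefixMatch"
	ReplaceFullPath    string
	ReplacePrefixMatch string
}

// rewritePath returns the redirect path for a request path that matched the
// rule's PathPrefix value matchedPrefix.
func rewritePath(m PathModifier, matchedPrefix, path string) string {
	switch m.Type {
	case "ReplaceFullPath":
		// The whole path is replaced, whatever followed the prefix.
		return m.ReplaceFullPath
	case "ReplacePrefixMatch":
		// Only the matched prefix is swapped; the remainder is kept.
		rest := strings.TrimPrefix(path, strings.TrimSuffix(matchedPrefix, "/"))
		out := strings.TrimSuffix(m.ReplacePrefixMatch, "/") + rest
		if out == "" {
			return "/"
		}
		return out
	default:
		return path
	}
}

func main() {
	full := PathModifier{Type: "ReplaceFullPath", ReplaceFullPath: "/status/200"}
	fmt.Println(rewritePath(full, "/get", "/get/anything")) // /status/200
	prefix := PathModifier{Type: "ReplacePrefixMatch", ReplacePrefixMatch: "/status"}
	fmt.Println(rewritePath(prefix, "/get", "/get/418")) // /status/418
}
```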

2.3 - HTTP Request Headers

The HTTPRoute resource can modify the headers of a request before forwarding it to the upstream service. At the time of this writing, Envoy Gateway only supports the core HTTPRoute filters, which consist of RequestRedirect and RequestHeaderModifier. To learn more about HTTP routing, refer to the Gateway API documentation.

A RequestHeaderModifier filter instructs Gateways to modify the headers in requests that match the rule before forwarding the request upstream. Note that the RequestHeaderModifier filter will only modify headers before the request is sent from Envoy to the upstream service and will not affect response headers returned to the downstream client.

Prerequisites

Follow the steps from the Quickstart Guide to install Envoy Gateway and the example manifest.

Before proceeding, you should be able to query the example backend using HTTP.

The RequestHeaderModifier filter can add new headers to a request before it is sent to the upstream. If the request does not have the header configured by the filter, then that header will be added to the request. If the request already has the header configured by the filter, then the value of the header in the filter will be appended to the value of the header in the request.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - headers.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
          weight: 1
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            add:
              - name: "add-header"
                value: "foo"
EOF

The HTTPRoute status should indicate that it has been accepted and is bound to the example Gateway.

kubectl get httproute/http-headers -o yaml

Get the Gateway’s address:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Querying headers.example/get should result in a 200 response from the example Gateway and the output from the example app should indicate that the upstream example app received the header add-header with the value: something,foo

$ curl -vvv --header "Host: headers.example" "http://${GATEWAY_HOST}:8080/get" --header "add-header: something"
...
> GET /get HTTP/1.1
> Host: headers.example
> User-Agent: curl/7.81.0
> Accept: */*
> add-header: something
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: application/json
< x-content-type-options: nosniff
< content-length: 474
< x-envoy-upstream-service-time: 0
< server: envoy
<
...
"headers": {
  "Accept": [
    "*/*"
  ],
  "Add-Header": [
    "something",
    "foo"
  ],
...

Setting headers is similar to adding headers. If the request does not have the header configured by the filter, it will be added. Unlike adding a header, which appends the configured value when the request already contains the header, setting a header replaces the existing value with the value configured in the filter.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - headers.example
  rules:
    - backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
          weight: 1
      matches:
        - path:
            type: PathPrefix
            value: /
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            set:
              - name: "set-header"
                value: "foo"
EOF

Querying headers.example/get should result in a 200 response from the example Gateway, and the output from the example app should indicate that the upstream example app received the header set-header with the original value something replaced by foo.

$ curl -vvv --header "Host: headers.example" "http://${GATEWAY_HOST}:8080/get" --header "set-header: something"
...
> GET /get HTTP/1.1
> Host: headers.example
> User-Agent: curl/7.81.0
> Accept: */*
> set-header: something
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: application/json
< x-content-type-options: nosniff
< content-length: 474
< x-envoy-upstream-service-time: 0
< server: envoy
<
"headers": {
  "Accept": [
    "*/*"
  ],
  "Set-Header": [
    "foo"
  ],
...

Headers can be removed from a request by simply supplying a list of header names.


cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - headers.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          name: backend
          port: 3000
          weight: 1
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            remove:
              - "remove-header"
EOF

Querying headers.example/get should result in a 200 response from the example Gateway and the output from the example app should indicate that the upstream example app received the header add-header, but the header remove-header that was sent by curl was removed before the upstream received the request.

$ curl -vvv --header "Host: headers.example" "http://${GATEWAY_HOST}:8080/get" --header "add-header: something" --header "remove-header: foo"
...
> GET /get HTTP/1.1
> Host: headers.example
> User-Agent: curl/7.81.0
> Accept: */*
> add-header: something
> remove-header: foo
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: application/json
< x-content-type-options: nosniff
< content-length: 474
< x-envoy-upstream-service-time: 0
< server: envoy
<
"headers": {
  "Accept": [
    "*/*"
  ],
  "Add-Header": [
    "something"
  ],
...

Combining Filters

Headers can be added, set, and removed in a single filter on the same HTTPRoute, and all three operations will take effect as expected:

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - headers.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
          weight: 1
      filters:
        - type: RequestHeaderModifier
          requestHeaderModifier:
            add:
              - name: "add-header-1"
                value: "foo"
            set:
              - name: "set-header-1"
                value: "bar"
            remove:
              - "removed-header"
EOF
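The add, set, and remove behaviour described in this guide maps closely onto Go's net/http Header methods (Add appends, Set replaces, Del removes). The sketch below uses that analogy to show the expected effect on the headers sent by curl in the earlier examples. HeaderModifier is a hypothetical type, and applying add, then set, then remove is an ordering chosen for the sketch rather than a documented Envoy guarantee.

```go
package main

import (
	"fmt"
	"net/http"
)

// HeaderModifier mirrors the add/set/remove lists of a
// RequestHeaderModifier filter (hypothetical type for this sketch).
type HeaderModifier struct {
	Add    map[string]string
	Set    map[string]string
	Remove []string
}

// apply edits h in place: Add appends a value, Set replaces all existing
// values, and Del drops the header. net/http canonicalizes header names,
// so "add-header" and "Add-Header" refer to the same header.
func (m HeaderModifier) apply(h http.Header) {
	for name, value := range m.Add {
		h.Add(name, value)
	}
	for name, value := range m.Set {
		h.Set(name, value)
	}
	for _, name := range m.Remove {
		h.Del(name)
	}
}

func main() {
	// Headers as sent by curl in the examples above.
	h := http.Header{}
	h.Set("add-header", "something")
	h.Set("set-header", "something")
	h.Set("remove-header", "foo")

	m := HeaderModifier{
		Add:    map[string]string{"add-header": "foo"},
		Set:    map[string]string{"set-header": "foo"},
		Remove: []string{"remove-header"},
	}
	m.apply(h)
	fmt.Println(h["Add-Header"], h["Set-Header"], h["Remove-Header"]) // [something foo] [foo] []
}
```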

2.4 - HTTP Routing

The HTTPRoute resource allows users to configure HTTP routing by matching HTTP traffic and forwarding it to Kubernetes backends. Currently, the only backend supported by Envoy Gateway is a Service resource. This guide shows how to route traffic based on host, header, and path fields and forward the traffic to different Kubernetes Services. To learn more about HTTP routing, refer to the Gateway API documentation.

Prerequisites

Install Envoy Gateway:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/v0.2.0/install.yaml

Wait for Envoy Gateway to become available:

kubectl wait --timeout=5m -n envoy-gateway-system deployment/envoy-gateway --for=condition=Available

Installation

Install the HTTP routing example resources:

kubectl apply -f https://raw.githubusercontent.com/envoyproxy/gateway/v0.2.0/examples/kubernetes/http-routing.yaml

The manifest installs a GatewayClass, Gateway, four Deployments, four Services, and three HTTPRoute resources.

The GatewayClass is a cluster-scoped resource that represents a class of Gateways that can be instantiated.

Note: Envoy Gateway is configured by default to manage a GatewayClass with controllerName: gateway.envoyproxy.io/gatewayclass-controller.

Verification

Check the status of the GatewayClass:

kubectl get gc --selector=example=http-routing

The status should reflect “Accepted=True”, indicating Envoy Gateway is managing the GatewayClass.

A Gateway represents configuration of infrastructure. When a Gateway is created, Envoy proxy infrastructure is provisioned or configured by Envoy Gateway. The gatewayClassName defines the name of a GatewayClass used by this Gateway. Check the status of the Gateway:

kubectl get gateways --selector=example=http-routing

The status should reflect “Ready=True”, indicating the Envoy proxy infrastructure has been provisioned. The status also provides the address of the Gateway. This address is used later in the guide to test connectivity to proxied backend services.

The three HTTPRoute resources create routing rules on the Gateway. In order to receive traffic from a Gateway, an HTTPRoute must be configured with parentRefs that reference the parent Gateway(s) it should be attached to.

An HTTPRoute can match against a single set of hostnames. These hostnames are matched before any other matching within the HTTPRoute takes place. Since example.com, foo.example.com, and bar.example.com are separate hosts with different routing requirements, each is deployed as its own HTTPRoute: example-route, foo-route, and bar-route.

Check the status of the HTTPRoutes:

kubectl get httproutes --selector=example=http-routing -o yaml

The status for each HTTPRoute should surface “Accepted=True” and a parentRef that references the example Gateway.

The example-route matches any traffic for “example.com” and forwards it to the “example-svc” Service.

Testing the Configuration

Before testing HTTP routing to the example-svc backend, get the Gateway’s address.

export GATEWAY_HOST=$(kubectl get gateway/example-gateway -o jsonpath='{.status.addresses[0].value}')

Test HTTP routing to the example-svc backend.

curl -vvv --header "Host: example.com" "http://${GATEWAY_HOST}/"

A 200 status code should be returned and the body should include "pod": "example-backend-*" indicating the traffic was routed to the example backend service. If you change the hostname to a hostname not represented in any of the HTTPRoutes, e.g. “www.example.com”, the HTTP traffic will not be routed and a 404 should be returned.

The foo-route matches any traffic for foo.example.com and applies its routing rules to forward the traffic to the “foo-svc” Service. Since there is only one path prefix match for /login, only foo.example.com/login/* traffic will be forwarded. Test HTTP routing to the foo-svc backend.

curl -vvv --header "Host: foo.example.com" "http://${GATEWAY_HOST}/login"

A 200 status code should be returned and the body should include "pod": "foo-backend-*" indicating the traffic was routed to the foo backend service. Traffic to any other paths that do not begin with /login will not be matched by this HTTPRoute. Test this by removing /login from the request.

curl -vvv --header "Host: foo.example.com" "http://${GATEWAY_HOST}/"

The HTTP traffic will not be routed and a 404 should be returned.

Similarly, the bar-route HTTPRoute matches traffic for bar.example.com. All traffic for this hostname will be evaluated against the routing rules. The most specific match takes precedence, which means that any traffic with the env: canary header will be forwarded to bar-canary-svc, and if the header is missing or its value is not canary, the traffic will be forwarded to bar-svc. Test HTTP routing to the bar-svc backend.

curl -vvv --header "Host: bar.example.com" "http://${GATEWAY_HOST}/"

A 200 status code should be returned and the body should include "pod": "bar-backend-*" indicating the traffic was routed to the bar backend service.

Test HTTP routing to the bar-canary-svc backend by adding the env: canary header to the request.

curl -vvv --header "Host: bar.example.com" --header "env: canary" "http://${GATEWAY_HOST}/"

A 200 status code should be returned and the body should include "pod": "bar-canary-backend-*" indicating the traffic was routed to the bar-canary backend service.
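The matching behaviour tested above (hostname first, then the most specific rule) can be sketched in Go. The Route and Rule types and the exampleRoutes contents are simplified assumptions loosely modelled on the example manifest, not its actual contents. Precedence follows the Gateway API ordering only as far as this example needs it: a longer path prefix wins, then a rule with a header match beats one without.

```go
package main

import (
	"fmt"
	"strings"
)

// Rule is a simplified HTTPRoute rule.
type Rule struct {
	PathPrefix  string
	HeaderName  string // empty means the rule has no header match
	HeaderValue string
	Backend     string
}

// Route is a simplified HTTPRoute with its own list of hostnames.
type Route struct {
	Hostnames []string
	Rules     []Rule
}

// prefixMatch implements element-wise PathPrefix matching: "/login" matches
// "/login" and "/login/x" but not "/loginx".
func prefixMatch(prefix, path string) bool {
	p := strings.TrimSuffix(prefix, "/")
	return p == "" || path == p || strings.HasPrefix(path, p+"/")
}

// moreSpecific reports whether a should take precedence over b.
func moreSpecific(a, b Rule) bool {
	if len(a.PathPrefix) != len(b.PathPrefix) {
		return len(a.PathPrefix) > len(b.PathPrefix)
	}
	return a.HeaderName != "" && b.HeaderName == ""
}

// pick matches the hostname first, then returns the backend of the most
// specific matching rule. ok is false when nothing matches (a 404).
func pick(routes []Route, host, path string, headers map[string]string) (backend string, ok bool) {
	var best Rule
	for _, rt := range routes {
		hostMatch := false
		for _, h := range rt.Hostnames {
			hostMatch = hostMatch || h == host
		}
		if !hostMatch {
			continue
		}
		for _, r := range rt.Rules {
			if !prefixMatch(r.PathPrefix, path) {
				continue
			}
			if r.HeaderName != "" && headers[r.HeaderName] != r.HeaderValue {
				continue
			}
			if !ok || moreSpecific(r, best) {
				best, ok = r, true
			}
		}
	}
	return best.Backend, ok
}

// exampleRoutes is an assumed approximation of the guide's three HTTPRoutes.
func exampleRoutes() []Route {
	return []Route{
		{Hostnames: []string{"example.com"}, Rules: []Rule{{PathPrefix: "/", Backend: "example-svc"}}},
		{Hostnames: []string{"foo.example.com"}, Rules: []Rule{{PathPrefix: "/login", Backend: "foo-svc"}}},
		{Hostnames: []string{"bar.example.com"}, Rules: []Rule{
			{PathPrefix: "/", Backend: "bar-svc"},
			{PathPrefix: "/", HeaderName: "env", HeaderValue: "canary", Backend: "bar-canary-svc"},
		}},
	}
}

func main() {
	routes := exampleRoutes()
	fmt.Println(pick(routes, "bar.example.com", "/", map[string]string{"env": "canary"})) // bar-canary-svc true
}
```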

2.5 - HTTPRoute Traffic Splitting

The HTTPRoute resource allows one or more backendRefs to be provided. Requests will be routed to these upstreams if they match the rules of the HTTPRoute. If an invalid backendRef is configured, then HTTP responses will be returned with status code 500 for all requests that would have been sent to that backend.

Installation

Follow the steps from the Quickstart Guide to install Envoy Gateway and the example manifest.

Before proceeding, you should be able to query the example backend using HTTP.

Single backendRef

When a single backendRef is configured in an HTTPRoute, it will receive 100% of the traffic.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - backends.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
EOF

The HTTPRoute status should indicate that it has been accepted and is bound to the example Gateway.

kubectl get httproute/http-headers -o yaml

Get the Gateway’s address:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Querying backends.example/get should result in a 200 response from the example Gateway and the output from the example app should indicate which pod handled the request. There is only one pod in the deployment for the example app from the quickstart, so it will be the same on all subsequent requests.

$ curl -vvv --header "Host: backends.example" "http://${GATEWAY_HOST}:8080/get"
...
> GET /get HTTP/1.1
> Host: backends.example
> User-Agent: curl/7.81.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: application/json
< x-content-type-options: nosniff
< content-length: 474
< x-envoy-upstream-service-time: 0
< server: envoy
<
...
"namespace": "default",
"ingress": "",
"service": "",
"pod": "backend-79665566f5-s589f"
...

Multiple backendRefs

If multiple backendRefs are configured, then traffic will be split between the backendRefs equally unless a weight is configured.

First, create a second instance of the example app from the quickstart:

cat <<EOF | kubectl apply -f -
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: backend-2
---
apiVersion: v1
kind: Service
metadata:
  name: backend-2
  labels:
    app: backend-2
    service: backend-2
spec:
  ports:
    - name: http
      port: 3000
      targetPort: 3000
  selector:
    app: backend-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backend-2
      version: v1
  template:
    metadata:
      labels:
        app: backend-2
        version: v1
    spec:
      serviceAccountName: backend-2
      containers:
        - image: gcr.io/k8s-staging-ingressconformance/echoserver:v20221109-7ee2f3e
          imagePullPolicy: IfNotPresent
          name: backend-2
          ports:
            - containerPort: 3000
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
EOF

Then create an HTTPRoute that uses both the app from the quickstart and the second instance that was just created:

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - backends.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
        - group: ""
          kind: Service
          name: backend-2
          port: 3000
EOF

Querying backends.example/get should result in 200 responses from the example Gateway, and the output from the example app that indicates which pod handled the request should switch between the first pod and the second one from the new deployment on subsequent requests.

$ curl -vvv --header "Host: backends.example" "http://${GATEWAY_HOST}:8080/get"
...
> GET /get HTTP/1.1
> Host: backends.example
> User-Agent: curl/7.81.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: application/json
< x-content-type-options: nosniff
< content-length: 474
< x-envoy-upstream-service-time: 0
< server: envoy
<
...
"namespace": "default",
"ingress": "",
"service": "",
"pod": "backend-75bcd4c969-lsxpz"
...

Weighted backendRefs

If multiple backendRefs are configured and an uneven traffic split between the backends is desired, then the weight field can be used to control the weight of requests to each backend. If weight is not configured for a backendRef, it is assumed to be 1.

The weight field in a backendRef controls the distribution of the traffic split. The proportion of requests to a single backendRef is calculated by dividing its weight by the sum of all backendRef weights in the HTTPRoute. The weight is not a percentage and the sum of all weights does not need to add up to 100.
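The calculation above is each weight divided by the total weight. A Go sketch of that arithmetic, using a hypothetical BackendRef type in which a nil Weight stands for an unconfigured weight (treated as 1):

```go
package main

import "fmt"

// BackendRef is a minimal stand-in; a nil Weight means "not configured".
type BackendRef struct {
	Name   string
	Weight *int
}

// weightOf returns the configured weight, or 1 when none is set.
func weightOf(r BackendRef) int {
	if r.Weight == nil {
		return 1
	}
	return *r.Weight
}

// shares returns each backend's expected fraction of requests: its weight
// divided by the sum of all weights in the rule.
func shares(refs []BackendRef) map[string]float64 {
	total := 0
	for _, r := range refs {
		total += weightOf(r)
	}
	out := make(map[string]float64, len(refs))
	for _, r := range refs {
		if total > 0 {
			out[r.Name] = float64(weightOf(r)) / float64(total)
		} else {
			out[r.Name] = 0 // all weights zero: no share for anyone
		}
	}
	return out
}

func main() {
	eight, two := 8, 2
	fmt.Println(shares([]BackendRef{{Name: "backend", Weight: &eight}, {Name: "backend-2", Weight: &two}})) // map[backend:0.8 backend-2:0.2]
	fmt.Println(shares([]BackendRef{{Name: "backend"}, {Name: "backend-2"}}))                              // map[backend:0.5 backend-2:0.5]
}
```

Weights of 80 and 20, or 4 and 1, would produce the same split, which is why the sum does not need to be 100.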

The HTTPRoute below will configure the gateway to send 80% of the traffic to the backend service, and 20% to the backend-2 service.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - backends.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
          weight: 8
        - group: ""
          kind: Service
          name: backend-2
          port: 3000
          weight: 2
EOF

Invalid backendRefs

backendRefs can be considered invalid for the following reasons:

- The group field is configured to something other than "". Currently, only the core API group (specified by omitting the group field or setting it to an empty string) is supported.
- The kind field is configured to anything other than Service. Envoy Gateway currently only supports Kubernetes Service backendRefs.
- The backendRef configures a service with a namespace not permitted by any existing ReferenceGrants.
- The port field is not configured or is configured to a port that does not exist on the Service.
- The named Service configured by the backendRef cannot be found.
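The checks above can be expressed as a single validation function. In this Go sketch, BackendRef, Service, and the grantAllows callback (standing in for a ReferenceGrant lookup) are hypothetical simplifications, not Envoy Gateway's code. An empty kind is treated as Service, matching the Gateway API default.

```go
package main

import (
	"errors"
	"fmt"
)

// BackendRef mirrors the backendRef fields discussed above.
type BackendRef struct {
	Group, Kind, Namespace, Name string
	Port                         int // 0 means not configured
}

// Service is a minimal view of a Kubernetes Service.
type Service struct {
	Namespace, Name string
	Ports           []int
}

// validateBackendRef applies the listed rules to a backendRef on an
// HTTPRoute in routeNS. grantAllows stands in for a ReferenceGrant lookup.
func validateBackendRef(ref BackendRef, routeNS string, services []Service, grantAllows func(fromNS, toNS string) bool) error {
	if ref.Group != "" {
		return errors.New("only the core API group is supported")
	}
	if ref.Kind != "" && ref.Kind != "Service" {
		return errors.New("only Service backendRefs are supported")
	}
	ns := ref.Namespace
	if ns == "" {
		ns = routeNS
	}
	if ns != routeNS && !grantAllows(routeNS, ns) {
		return fmt.Errorf("no ReferenceGrant permits access to namespace %q", ns)
	}
	if ref.Port == 0 {
		return errors.New("port is not configured")
	}
	for _, svc := range services {
		if svc.Namespace != ns || svc.Name != ref.Name {
			continue
		}
		for _, p := range svc.Ports {
			if p == ref.Port {
				return nil
			}
		}
		return fmt.Errorf("service %s/%s has no port %d", ns, ref.Name, ref.Port)
	}
	return fmt.Errorf("service %s/%s not found", ns, ref.Name)
}

func main() {
	services := []Service{
		{Namespace: "default", Name: "backend", Ports: []int{3000}},
		{Namespace: "default", Name: "backend-2", Ports: []int{3000}},
	}
	noGrants := func(fromNS, toNS string) bool { return false }
	fmt.Println(validateBackendRef(BackendRef{Name: "backend", Port: 3000}, "default", services, noGrants))   // <nil>
	fmt.Println(validateBackendRef(BackendRef{Name: "backend-2", Port: 9000}, "default", services, noGrants)) // service default/backend-2 has no port 9000
}
```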

Modifying the above example to make the backend-2 backendRef invalid by using a port that does not exist on the Service will result in 80% of the traffic being sent to the backend service, and 20% of the traffic receiving an HTTP response with status code 500.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: HTTPRoute
metadata:
  name: http-headers
spec:
  parentRefs:
    - name: eg
  hostnames:
    - backends.example
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - group: ""
          kind: Service
          name: backend
          port: 3000
          weight: 8
        - group: ""
          kind: Service
          name: backend-2
          port: 9000
          weight: 2
EOF

Querying backends.example/get should result in 200 responses 80% of the time, and 500 responses 20% of the time.

$ curl -vvv --header "Host: backends.example" "http://${GATEWAY_HOST}:8080/get"
> GET /get HTTP/1.1
> Host: backends.example
> User-Agent: curl/7.81.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 500 Internal Server Error
< server: envoy
< content-length: 0
<

2.6 - Secure Gateways

This guide will help you get started using secure Gateways. The guide uses a self-signed CA, so it should be used for testing and demonstration purposes only.

Prerequisites

- OpenSSL to generate TLS assets.

Installation

Follow the steps from the Quickstart Guide to install Envoy Gateway and the example manifest.

Before proceeding, you should be able to query the example backend using HTTP.

TLS Certificates

Generate the certificates and keys used by the Gateway to terminate client TLS connections.

Create a root certificate and private key to sign certificates:

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -subj '/O=example Inc./CN=example.com' -keyout example.com.key -out example.com.crt

Create a certificate and a private key for www.example.com:

openssl req -out www.example.com.csr -newkey rsa:2048 -nodes -keyout www.example.com.key -subj "/CN=www.example.com/O=example organization"

openssl x509 -req -days 365 -CA example.com.crt -CAkey example.com.key -set_serial 0 -in www.example.com.csr -out www.example.com.crt
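Where OpenSSL is not available, a similar CA and leaf chain can be produced with Go's standard crypto/x509 package. This is a swapped-in alternative to the commands above, not part of the guide, and is equally for testing only. It keeps everything in memory and discards the leaf key (a real setup would PEM-encode both for the Secret). Unlike the openssl commands, it adds a DNS subject alternative name to the leaf, because Go's hostname verification ignores the Common Name.

```go
package main

import (
	"crypto/rand"
	"crypto/rsa"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"time"
)

// newCA creates a self-signed root similar to the first openssl command:
// O=example Inc., CN=example.com, RSA 2048, valid for 365 days.
func newCA() (*x509.Certificate, *rsa.PrivateKey, error) {
	key, err := rsa.GenerateKey(rand.Reader, 2048)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber:          big.NewInt(1),
		Subject:               pkix.Name{Organization: []string{"example Inc."}, CommonName: "example.com"},
		NotBefore:             time.Now().Add(-time.Hour), // tolerate small clock skew
		NotAfter:              time.Now().AddDate(0, 0, 365),
		IsCA:                  true,
		BasicConstraintsValid: true,
		KeyUsage:              x509.KeyUsageCertSign | x509.KeyUsageDigitalSignature,
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// newLeaf issues a server certificate for host, signed by the CA.
func newLeaf(host string, ca *x509.Certificate, caKey *rsa.PrivateKey) (*x509.Certificate, error) {
	key, err := rsa.GenerateKey(rand.Reader, 2048)
	if err != nil {
		return nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(2),
		Subject:      pkix.Name{Organization: []string{"example organization"}, CommonName: host},
		DNSNames:     []string{host}, // SAN required by Go's hostname verification
		NotBefore:    time.Now().Add(-time.Hour),
		NotAfter:     time.Now().AddDate(0, 0, 365),
		KeyUsage:     x509.KeyUsageDigitalSignature | x509.KeyUsageKeyEncipherment,
		ExtKeyUsage:  []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth},
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, ca, &key.PublicKey, caKey)
	if err != nil {
		return nil, err
	}
	return x509.ParseCertificate(der)
}

// verify checks that leaf chains to ca and is valid for host, roughly the
// check curl performs when given --cacert.
func verify(leaf, ca *x509.Certificate, host string) error {
	roots := x509.NewCertPool()
	roots.AddCert(ca)
	_, err := leaf.Verify(x509.VerifyOptions{Roots: roots, DNSName: host})
	return err
}

func main() {
	ca, caKey, err := newCA()
	if err != nil {
		panic(err)
	}
	leaf, err := newLeaf("www.example.com", ca, caKey)
	if err != nil {
		panic(err)
	}
	fmt.Println(verify(leaf, ca, "www.example.com"))        // <nil>
	fmt.Println(verify(leaf, ca, "foo.example.com") != nil) // true
}
```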

Store the cert/key in a Secret:

kubectl create secret tls example-cert --key=www.example.com.key --cert=www.example.com.crt

Update the Gateway from the Quickstart guide to include an HTTPS listener that listens on port 8443 and references the example-cert Secret:

kubectl patch gateway eg --type=json --patch '[{
  "op": "add",
  "path": "/spec/listeners/-",
  "value": {
    "name": "https",
    "protocol": "HTTPS",
    "port": 8443,
    "tls": {
      "mode": "Terminate",
      "certificateRefs": [{
        "kind": "Secret",
        "group": "",
        "name": "example-cert"
      }]
    }
  }
}]'

Verify the Gateway status:

kubectl get gateway/eg -o yaml

Testing

Clusters without External LoadBalancer Support

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8043:8443 &

Query the example app through Envoy proxy:

curl -v -HHost:www.example.com --resolve "www.example.com:8043:127.0.0.1" \
  --cacert example.com.crt https://www.example.com:8043/get

Clusters with External LoadBalancer Support

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Query the example app through the Gateway:

curl -v -HHost:www.example.com --resolve "www.example.com:8443:${GATEWAY_HOST}" \
  --cacert example.com.crt https://www.example.com:8443/get

Multiple HTTPS Listeners

Create a TLS cert/key for the additional HTTPS listener:

openssl req -out foo.example.com.csr -newkey rsa:2048 -nodes -keyout foo.example.com.key -subj "/CN=foo.example.com/O=example organization"

openssl x509 -req -days 365 -CA example.com.crt -CAkey example.com.key -set_serial 0 -in foo.example.com.csr -out foo.example.com.crt

Store the cert/key in a Secret:

kubectl create secret tls foo-cert --key=foo.example.com.key --cert=foo.example.com.crt

Create another HTTPS listener on the example Gateway:

kubectl patch gateway eg --type=json --patch '[{
  "op": "add",
  "path": "/spec/listeners/-",
  "value": {
    "name": "https-foo",
    "protocol": "HTTPS",
    "port": 8443,
    "hostname": "foo.example.com",
    "tls": {
      "mode": "Terminate",
      "certificateRefs": [{
        "kind": "Secret",
        "group": "",
        "name": "foo-cert"
      }]
    }
  }
}]'
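Each additional HTTPS listener takes the same patch shape, differing only in its name, hostname, and Secret. The sketch below is a hypothetical bash helper, https_listener_patch, that prints that JSON Patch so it can be passed to kubectl patch. It assumes the Secret lives in the Gateway's namespace, and it defaults the port to 8443 as in this guide.

```shell
#!/usr/bin/env bash
set -euo pipefail

# https_listener_patch NAME HOSTNAME SECRET [PORT]  (hypothetical helper)
# Prints a JSON Patch that appends one HTTPS listener which terminates TLS
# using SECRET from the Gateway's own namespace.
https_listener_patch() {
  local name="$1" hostname="$2" secret="$3" port="${4:-8443}"
  cat <<EOF
[{
  "op": "add",
  "path": "/spec/listeners/-",
  "value": {
    "name": "${name}",
    "protocol": "HTTPS",
    "port": ${port},
    "hostname": "${hostname}",
    "tls": {
      "mode": "Terminate",
      "certificateRefs": [{"kind": "Secret", "group": "", "name": "${secret}"}]
    }
  }
}]
EOF
}

# Print the patch equivalent to the https-foo listener above.
https_listener_patch https-foo foo.example.com foo-cert
```

A further listener could then be added with kubectl patch gateway eg --type=json --patch "$(https_listener_patch https-bar bar.example.com bar-cert)". Here https-bar, bar.example.com, and bar-cert are hypothetical names for that listener and its Secret.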

Update the HTTPRoute to route traffic for hostname foo.example.com to the example backend service:

kubectl patch httproute backend --type=json --patch '[{
  "op": "add",
  "path": "/spec/hostnames/-",
  "value": "foo.example.com"
}]'

Verify the Gateway status:

kubectl get gateway/eg -o yaml

Follow the steps in the Testing section to test connectivity to the backend app through both Gateway listeners. Replace www.example.com with foo.example.com to test the new HTTPS listener.
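To exercise both listeners in one pass, the sketch below loops over the hostnames using curl's --resolve option. That option makes curl use each hostname for both the TLS SNI value and the Host header, so each request should be matched to the listener and certificate for its hostname. probe_https is a hypothetical helper. It assumes the example.com.crt CA file from this guide and the /get path used above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# probe_https PORT ADDRESS CA_CERT HOST...  (hypothetical helper)
# Prints "HOST HTTP_STATUS" for each HOST, or "HOST error" if curl fails
# (for example, on a TLS verification error or a refused connection).
probe_https() {
  local port="$1" addr="$2" cacert="$3" host code
  shift 3
  for host in "$@"; do
    code=$(curl -sS -o /dev/null -w '%{http_code}' \
      --resolve "${host}:${port}:${addr}" --cacert "$cacert" \
      "https://${host}:${port}/get") || code="error"
    echo "${host} ${code}"
  done
}
```

With the port-forward from the Testing section running, call probe_https 8043 127.0.0.1 example.com.crt www.example.com foo.example.com. On clusters with external load balancer support, use port 8443 and "${GATEWAY_HOST}" instead.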

Cross Namespace Certificate References

A Gateway can be configured to reference a certificate in a different namespace. This is allowed by a ReferenceGrant created in the target namespace. Without the ReferenceGrant, a cross-namespace reference is invalid.

Before proceeding, ensure you can query the HTTPS backend service from the Testing section.

To demonstrate cross-namespace certificate references, create a ReferenceGrant that allows Gateways from the “default” namespace to reference Secrets in the “envoy-gateway-system” namespace:

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: ReferenceGrant
metadata:
  name: example
  namespace: envoy-gateway-system
spec:
  from:
  - group: gateway.networking.k8s.io
    kind: Gateway
    namespace: default
  to:
  - group: ""
    kind: Secret
EOF
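The ReferenceGrant above lets Gateways in the default namespace reference any Secret in envoy-gateway-system. Entries under spec.to also accept an optional name field that narrows the grant to a single object. The sketch below is a hypothetical bash helper that prints such a narrowed ReferenceGrant for piping into kubectl apply -f -. The resource names in the example call are the ones used in this guide.

```shell
#!/usr/bin/env bash
set -euo pipefail

# reference_grant_yaml GRANT_NAME TARGET_NAMESPACE FROM_NAMESPACE SECRET_NAME  (hypothetical helper)
# Prints a ReferenceGrant, created in TARGET_NAMESPACE, that lets Gateways in
# FROM_NAMESPACE reference only the Secret named SECRET_NAME.
reference_grant_yaml() {
  local grant="$1" target_ns="$2" from_ns="$3" secret="$4"
  cat <<EOF
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: ReferenceGrant
metadata:
  name: ${grant}
  namespace: ${target_ns}
spec:
  from:
  - group: gateway.networking.k8s.io
    kind: Gateway
    namespace: ${from_ns}
  to:
  - group: ""
    kind: Secret
    name: ${secret}
EOF
}

reference_grant_yaml example envoy-gateway-system default example-cert
```

To apply it, run reference_grant_yaml example envoy-gateway-system default example-cert | kubectl apply -f -.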

Delete the previously created Secret:

kubectl delete secret/example-cert

The Gateway HTTPS listener should now surface the Ready: False status condition, and the example HTTPS backend should no longer be reachable through the Gateway.

kubectl get gateway/eg -o yaml
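Instead of reading the full YAML, you can print the per-listener conditions with kubectl's JSONPath output. This makes the Ready condition on the https listener easy to spot. The sketch below is a hypothetical bash wrapper. Its field paths assume the Gateway API status.listeners[].conditions[] structure.

```shell
#!/usr/bin/env bash
set -euo pipefail

# listener_conditions GATEWAY [NAMESPACE]  (hypothetical helper)
# Prints one line per listener: "NAME: TYPE=STATUS TYPE=STATUS ...".
listener_conditions() {
  local gateway="$1" namespace="${2:-default}"
  kubectl get "gateway/${gateway}" -n "${namespace}" \
    -o jsonpath='{range .status.listeners[*]}{.name}{":"}{range .conditions[*]}{" "}{.type}{"="}{.status}{end}{"\n"}{end}'
}
```

For the Gateway in this guide, run listener_conditions eg.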

Recreate the example Secret in the envoy-gateway-system namespace:

kubectl create secret tls example-cert -n envoy-gateway-system --key=www.example.com.key --cert=www.example.com.crt

Update the Gateway HTTPS listener's certificate reference to include namespace: envoy-gateway-system, for example:

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1beta1
kind: Gateway
metadata:
  name: eg
spec:
  gatewayClassName: eg
  listeners:
  - name: http
    protocol: HTTP
    port: 8080
  - name: https
    protocol: HTTPS
    port: 8443
    tls:
      mode: Terminate
      certificateRefs:
      - kind: Secret
        group: ""
        name: example-cert
        namespace: envoy-gateway-system
EOF
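Applying the full manifest above with kubectl apply will likely replace the Gateway's listener list as a whole. That would drop the https-foo listener added in the Multiple HTTPS Listeners section. To keep that listener, an alternative is a JSON Patch that only adds the namespace to the existing certificate reference. The sketch below assumes the https listener is at index 1, after the Quickstart http listener; confirm this with kubectl get gateway/eg -o yaml first. cert_ref_namespace_patch is a hypothetical helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# cert_ref_namespace_patch LISTENER_INDEX NAMESPACE  (hypothetical helper)
# Prints a JSON Patch that sets the namespace of the first certificateRef of the
# listener at LISTENER_INDEX, leaving all other listeners untouched.
cert_ref_namespace_patch() {
  local index="$1" namespace="$2"
  printf '[{"op": "add", "path": "/spec/listeners/%s/tls/certificateRefs/0/namespace", "value": "%s"}]\n' \
    "$index" "$namespace"
}

cert_ref_namespace_patch 1 envoy-gateway-system
```

To apply it, run kubectl patch gateway eg --type=json --patch "$(cert_ref_namespace_patch 1 envoy-gateway-system)".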

The Gateway HTTPS listener status should now surface the Ready: True condition, and you should once again be able to query the HTTPS backend through the Gateway.

Lastly, test connectivity using the steps in the Testing section above.

Clean-Up

Follow the steps from the Quickstart Guide to uninstall Envoy Gateway and the example manifest.

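Delete the Secrets. If you completed the Cross Namespace Certificate References steps, the example-cert Secret and the ReferenceGrant are in the envoy-gateway-system namespace:

kubectl delete secret/example-cert -n envoy-gateway-system

kubectl delete referencegrant/example -n envoy-gateway-system

Otherwise, example-cert is still in the default namespace:

kubectl delete secret/example-cert

In either case, delete the foo-cert Secret:

kubectl delete secret/foo-cert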

Next Steps

Check out the Developer Guide to get involved in the project.

2.7 - TLS Passthrough

This guide walks through the steps required to configure TLS Passthrough via Envoy Gateway. In the Secure Gateways guide, the Gateway terminates the client TLS connection. With TLS Passthrough, the application itself terminates the TLS connection. The Gateway routes each connection to the application based on the SNI (Server Name Indication) value that the client sends in the TLS handshake.

Prerequisites

- OpenSSL to generate TLS assets.

Installation

Follow the steps from the Quickstart Guide to install Envoy Gateway and the example manifest.

Before proceeding, you should be able to query the example backend using HTTP.

TLS Certificates

Generate the certificates and keys used by the Service to terminate client TLS connections.

For the application, we’ll deploy a sample echoserver app, with the certificates loaded in the application Pod.

Note: These certificates will not be used by the Gateway, but will remain in the application scope.

Create a root certificate and private key to sign certificates:

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -subj '/O=example Inc./CN=example.com' -keyout example.com.key -out example.com.crt

Create a certificate and a private key for passthrough.example.com:

openssl req -out passthrough.example.com.csr -newkey rsa:2048 -nodes -keyout passthrough.example.com.key -subj "/CN=passthrough.example.com/O=some organization"

openssl x509 -req -sha256 -days 365 -CA example.com.crt -CAkey example.com.key -set_serial 0 -in passthrough.example.com.csr -out passthrough.example.com.crt
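With passthrough, Envoy does not decrypt the traffic. The client therefore validates the application's certificate against the hostname it sent in SNI. The sketch below is a quick offline check that a certificate is valid for that hostname. It assumes bash and OpenSSL 1.1.0 or later, which provides x509 -checkhost. cert_matches_host is a hypothetical helper. The demo signs a throwaway pair in a temporary directory with this section's commands.

```shell
#!/usr/bin/env bash
set -euo pipefail

# cert_matches_host CERT HOST  (hypothetical helper)
# Succeeds if CERT is valid for HOST according to OpenSSL's hostname check
# (DNS subjectAltNames, falling back to the subject CN when there are none).
cert_matches_host() {
  local cert="$1" host="$2"
  openssl x509 -noout -in "$cert" -checkhost "$host" 2>/dev/null | grep -q "does match"
}

# Demo: repeat this section's CA and passthrough.example.com steps in a scratch directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
cd "$workdir"
openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 \
  -subj '/O=example Inc./CN=example.com' -keyout example.com.key -out example.com.crt 2>/dev/null
openssl req -out passthrough.example.com.csr -newkey rsa:2048 -nodes \
  -keyout passthrough.example.com.key \
  -subj "/CN=passthrough.example.com/O=some organization" 2>/dev/null
openssl x509 -req -sha256 -days 365 -CA example.com.crt -CAkey example.com.key -set_serial 0 \
  -in passthrough.example.com.csr -out passthrough.example.com.crt 2>/dev/null

for host in passthrough.example.com www.example.com; do
  if cert_matches_host passthrough.example.com.crt "$host"; then
    echo "${host}: matches"
  else
    echo "${host}: does not match"
  fi
done
```

To check the files created above, run cert_matches_host passthrough.example.com.crt passthrough.example.com.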

Store the cert/key in a Secret:

kubectl create secret tls server-certs --key=passthrough.example.com.key --cert=passthrough.example.com.crt

Deployment

Deploy the TLS Passthrough application's Deployment, Service, and TLSRoute:

kubectl apply -f https://raw.githubusercontent.com/envoyproxy/gateway/v0.2.0/examples/kubernetes/tls-passthrough.yaml

Patch the Gateway from the Quickstart guide to include a TLS listener that listens on port 6443 and is configured for TLS mode Passthrough:

kubectl patch gateway eg --type=json --patch '[{
  "op": "add",
  "path": "/spec/listeners/-",
  "value": {
    "name": "tls",
    "protocol": "TLS",
    "hostname": "passthrough.example.com",
    "tls": {"mode": "Passthrough"},
    "port": 6443
  }
}]'

Testing

Clusters without External LoadBalancer Support

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 6043:6443 &

Curl the example app through Envoy proxy:

curl -v --resolve "passthrough.example.com:6043:127.0.0.1" https://passthrough.example.com:6043 \
  --cacert example.com.crt

Clusters with External LoadBalancer Support

You can also test the same functionality by sending traffic to the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through the Gateway, i.e. Envoy proxy:

curl -v -HHost:passthrough.example.com --resolve "passthrough.example.com:6443:${GATEWAY_HOST}" \
  --cacert example.com.crt https://passthrough.example.com:6443/get

Clean-Up

Follow the steps from the Quickstart Guide to uninstall Envoy Gateway and the example manifest.

Delete the Secret:

kubectl delete secret/server-certs

Next Steps

Check out the Developer Guide to get involved in the project.

3 - Get Involved

This section includes content related to contributing.

3.1 - Roadmap

This section records the roadmap of Envoy Gateway.

This document serves as a high-level reference for Envoy Gateway users and contributors to understand the direction of the project.

Contributing to the Roadmap

- To add a feature to the roadmap, create an issue or join a community meeting to discuss your use case. If your feature is accepted, a maintainer will assign your issue to a release milestone and update this document accordingly.

- To help with an existing roadmap item, comment on or assign yourself to the associated issue.