Envoy Gateway is an open source project for managing Envoy Proxy as a standalone or Kubernetes-based application gateway. Gateway API resources are used to dynamically provision and configure the managed Envoy Proxies.


Envoy Gateway is a Kubernetes-native API Gateway and reverse proxy control plane. It simplifies deploying and operating Envoy Proxy as a data plane by using the standard Gateway API and its own extensible APIs.

By combining Envoy’s performance and flexibility with Kubernetes-native configuration, Envoy Gateway helps platform teams expose and manage secure, observable, and scalable APIs with minimal operational overhead.

Traditionally, configuring Envoy Proxy required deep networking expertise and writing complex configuration files. Envoy Gateway removes that barrier by:

Envoy Gateway is designed to be simple for app developers, powerful for platform engineers, and production-ready for large-scale deployments.

The different layers of Envoy Gateway are the following:

| Layer | Description |
|---|---|
| User Configuration | Users define routing, security, and traffic policies using standard Kubernetes Gateway API resources, optionally extended with Envoy Gateway CRDs. |
| Envoy Gateway Controller | A control plane component that watches Gateway API and Envoy Gateway-specific resources, translates them, and produces configuration for Envoy Proxy. |
| Envoy Proxy (Data Plane) | A high-performance proxy that receives and handles live traffic according to the configuration generated by Envoy Gateway. |

Together, these layers create a system that’s:

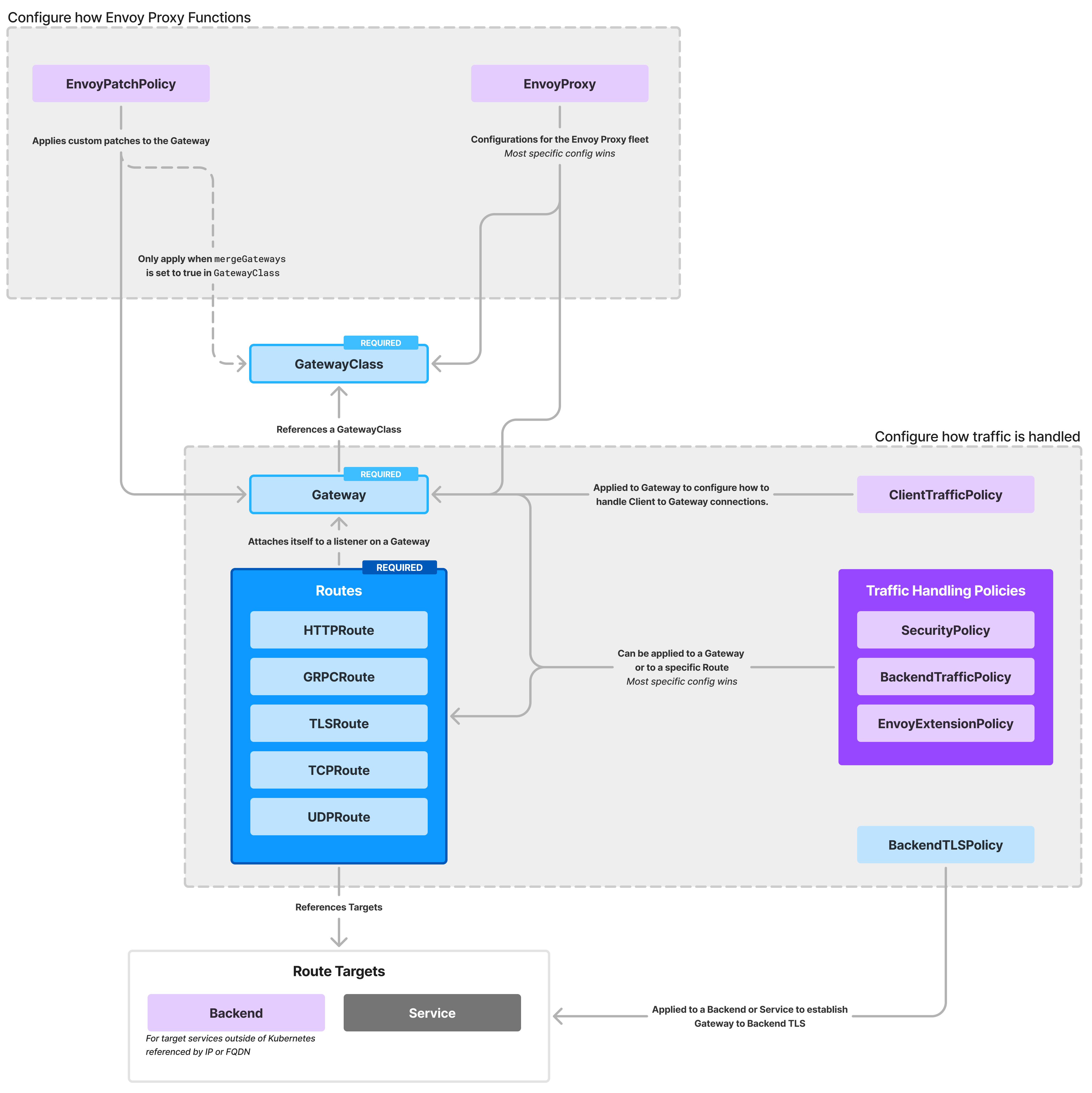

There are several resources that play a part in enabling you to meet your Kubernetes ingress traffic handling needs. This page provides a brief overview of the resources you’ll be working with.

| Resource | API | Required | Purpose | References | Description |
|---|---|---|---|---|---|
| GatewayClass | Gateway API | Yes | Gateway Config | Core | Defines a class of Gateways with common configuration. |
| Gateway | Gateway API | Yes | Gateway Config | GatewayClass | Specifies how traffic can enter the cluster. |
| HTTPRoute, GRPCRoute, TLSRoute, TCPRoute, UDPRoute | Gateway API | Yes | Routing | Gateway | Define routing rules for different types of traffic. Note: For simplicity, these resources are referenced collectively as Route in the References column. |
| Backend | EG API | No | Routing | N/A | Used for routing to cluster-external backends using FQDN or IP. Can also be used when you want to extend Envoy with external processes accessed via Unix Domain Sockets. |
| ClientTrafficPolicy | EG API | No | Traffic Handling | Gateway | Specifies policies for handling client traffic, including rate limiting, retries, and other client-specific configurations. |
| BackendTrafficPolicy | EG API | No | Traffic Handling | Gateway, Route | Specifies policies for traffic directed towards backend services, including load balancing, health checks, and failover strategies. Note: Most specific configuration wins. |
| SecurityPolicy | EG API | No | Security | Gateway, Route | Defines security-related policies such as authentication, authorization, and encryption settings for traffic handled by Envoy Gateway. Note: Most specific configuration wins. |
| BackendTLSPolicy | Gateway API | No | Security | Service | Defines TLS settings for backend connections, including certificate management, TLS version settings, and other security configurations. This policy is applied to Kubernetes Services. |
| EnvoyProxy | EG API | No | Customize & Extend | GatewayClass, Gateway | The EnvoyProxy resource represents the deployment and configuration of the Envoy proxy itself within a Kubernetes cluster, managing its lifecycle and settings. Note: Most specific configuration wins. |
| EnvoyPatchPolicy | EG API | No | Customize & Extend | GatewayClass, Gateway | This policy defines custom patches to be applied to Envoy Gateway resources, allowing users to tailor the configuration to their specific needs. Note: Most specific configuration wins. |
| EnvoyExtensionPolicy | EG API | No | Customize & Extend | Gateway, Route, Backend | Allows for the configuration of Envoy proxy extensions, enabling custom behavior and functionality. Note: Most specific configuration wins. |
| HTTPRouteFilter | EG API | No | Customize & Extend | HTTPRoute | Allows for additional request/response processing. |

For a deeper understanding of Envoy Gateway’s building blocks, you may also wish to explore these conceptual guides:

You may want to be familiar with:

The Gateway API is a Kubernetes API designed to provide a consistent, expressive, and extensible method for managing network traffic into and within a Kubernetes cluster, compared to the legacy Ingress API. It introduces core resources such as GatewayClass and Gateway and various route types like HTTPRoute and TLSRoute, which allow you to define how traffic is routed, secured, and exposed.
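As a rough sketch of how these core resources fit together, the manifests below define a GatewayClass, a Gateway with a single HTTP listener, and an HTTPRoute bound to that Gateway. The resource names (example-class, example-gateway, example-route, example-svc), the hostname, and the port numbers are illustrative assumptions; the controllerName is the Envoy Gateway value that appears later on this page.

```yaml
# Illustrative only: all names, the hostname, and the ports are assumptions.
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: example-class
spec:
  # Hands Gateways of this class to the Envoy Gateway controller.
  controllerName: gateway.envoyproxy.io/gatewayclass-controller
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: example-class
  listeners:
    - name: http
      protocol: HTTP
      port: 80
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-route
spec:
  # Attaches the route to the Gateway defined above.
  parentRefs:
    - name: example-gateway
  hostnames:
    - "www.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: example-svc   # hypothetical Kubernetes Service
          port: 8080
```

The chain of references (HTTPRoute to Gateway to GatewayClass) is what lets different teams own different layers of the configuration.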

The Gateway API succeeds the Ingress API, which many Kubernetes users may already be familiar with. The Ingress API provided a mechanism for exposing HTTP(S) services to external traffic. The lack of advanced features like regex path matching led to custom annotations to compensate for these deficiencies. This non-standard approach led to fragmentation across Ingress Controllers, challenging portability.

Use the Gateway API to:

In essence, the Gateway API provides a standard interface. Envoy Gateway adds production-grade capabilities to that interface, bridging the gap between simplicity and power while keeping everything Kubernetes-native.

One of the Gateway API’s key strengths is that implementers can extend it. While providing a foundation for standard routing and traffic control needs, it enables implementations to introduce custom resources that address specific use cases.

Envoy Gateway leverages this model by introducing a suite of Gateway API extensions—implemented as Kubernetes Custom Resource Definitions (CRDs)—to expose powerful features from Envoy Proxy. These features include enhanced support for rate limiting, authentication, traffic shaping, and more. By utilizing these extensions, users can access production-grade functionality in a Kubernetes-native and declarative manner, without needing to write a low-level Envoy configuration.

Gateway API Extensions let you configure extra features that aren’t part of the standard Kubernetes Gateway API. These extensions are built by the teams that create and maintain Gateway API implementations. The Gateway API was designed to be extensible, safe, and reliable. In the old Ingress API, people had to use custom annotations to add new features, but those weren’t type-safe, making it hard to check whether a configuration was correct. With Gateway API Extensions, implementers provide type-safe Custom Resource Definitions (CRDs). This means every configuration you write has a clear structure and strict rules, making it easier to catch mistakes early and be confident your setup is valid.

Here are some examples of what kind of features extensions include:

The Envoy Gateway API introduces a set of Gateway API extensions that enable users to leverage the power of the Envoy proxy. Envoy Gateway uses a policy attachment model, where custom policies are applied to standard Gateway API resources (like HTTPRoute or Gateway) without modifying the core API. This approach provides separation of concerns and makes it easier to manage configurations across teams.

The extensions are Backend, BackendTrafficPolicy, ClientTrafficPolicy, EnvoyExtensionPolicy, EnvoyGateway, EnvoyPatchPolicy, EnvoyProxy, HTTPRouteFilter, and SecurityPolicy.

These extensions are processed through Envoy Gateway’s control plane, translating them into xDS configurations applied to Envoy Proxy instances. This layered architecture allows for consistent, scalable, and production-grade traffic control without needing to manage raw Envoy configuration directly.

BackendTrafficPolicy is an extension to the Kubernetes Gateway API that controls how Envoy Gateway communicates with your backend services. It can configure connection behavior, resilience mechanisms, and performance optimizations without requiring changes to your applications.

Think of it as a traffic controller between your gateway and backend services. It can detect problems, prevent failures from spreading, and optimize request handling to improve system stability.

BackendTrafficPolicy is particularly useful in scenarios where you need to:

Protect your services: Limit connections and reject excess traffic when necessary

Build resilient systems: Detect failing services and redirect traffic

Improve performance: Optimize how requests are distributed and responses are handled

Test system behavior: Inject faults and validate your recovery mechanisms
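To make the first two scenarios more concrete, here is a minimal sketch of a BackendTrafficPolicy that caps connections with a circuit breaker and retries failed requests. The policy name, the target route name, and all numeric values are assumptions chosen for illustration, and the field names reflect the v1alpha1 API as I understand it; check them against the API reference for your Envoy Gateway version.

```yaml
# Illustrative sketch: names and thresholds are assumptions, not recommendations.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: example-resilience-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: example-route        # hypothetical route
  # Protect the backend: reject traffic beyond these thresholds.
  circuitBreaker:
    maxConnections: 100
    maxPendingRequests: 50
    maxParallelRequests: 200
  # Build resilience: retry requests that fail with a 503 or a connection failure.
  retry:
    numRetries: 3
    retryOn:
      httpStatusCodes:
        - 503
      triggers:
        - connect-failure
```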

BackendTrafficPolicy is part of the Envoy Gateway API suite, which extends the Kubernetes Gateway API with additional capabilities. It’s implemented as a Custom Resource Definition (CRD) that you can use to configure how Envoy Gateway manages traffic to your backend services.

You can attach it to Gateway API resources in two ways:

- Use targetRefs to directly reference specific Gateway resources
- Use targetSelectors to match Gateway resources based on labels

The policy applies to all resources that match either targeting method. When multiple policies target the same resource, the most specific configuration wins.

For example, consider these two policies:

```yaml
# Policy 1: Applies to all routes in the gateway
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: gateway-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: my-gateway
  circuitBreaker:
    maxConnections: 100
---
# Policy 2: Applies to a specific route
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: route-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: my-route
  circuitBreaker:
    maxConnections: 50
```

In this example, both gateway-policy and route-policy apply to my-route. However, since Policy 2 targets the route directly while Policy 1 targets the gateway, Policy 2’s configuration (maxConnections: 50) will take precedence for that specific route.

Lastly, it’s important to note that even when you apply a policy to a Gateway, the policy’s effects are tracked separately for each backend service referenced in your routes. For example, if you set up circuit breaking on a Gateway with multiple backend services, each backend service will have its own independent circuit breaker counter. This ensures that issues with one backend service don’t affect the others.

BackendTrafficPolicy supports merging configurations using the mergeType field, which allows route-level policies to combine with gateway-level policies rather than completely overriding them. This enables layered policy strategies where platform teams can set baseline configurations at the Gateway level, while application teams can add specific policies for their routes.

Here’s an example demonstrating policy merging for rate limiting:

```yaml
# Platform team: Gateway-level policy with global abuse prevention
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: global-backendtrafficpolicy
spec:
  rateLimit:
    type: Global
    global:
      rules:
        - clientSelectors:
            - sourceCIDR:
                type: Distinct
                value: 0.0.0.0/0
          limit:
            requests: 100
            unit: Second
          shared: true
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
---
# Application team: Route-level policy with specific limits
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: route-backendtrafficpolicy
spec:
  mergeType: StrategicMerge # Enables merging with gateway policy
  rateLimit:
    type: Global
    global:
      rules:
        - clientSelectors:
            - sourceCIDR:
                type: Distinct
                value: 0.0.0.0/0
          limit:
            requests: 5
            unit: Minute
          shared: false
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: signup-service-httproute
```

In this example, the route-level policy merges with the gateway-level policy, resulting in both rate limits being enforced: the global 100 requests/second abuse limit and the route-specific 5 requests/minute limit.

- The mergeType field can only be set on policies targeting child resources (like HTTPRoute), not parent resources (like Gateway).
- When mergeType is unset, no merging occurs; only the most specific policy takes effect.

ClientTrafficPolicy is an extension to the Kubernetes Gateway API that allows system administrators to configure how the Envoy Proxy server behaves with downstream clients. It is a policy attachment resource that can be applied to Gateway resources and holds settings for configuring the behavior of the connection between the downstream client and Envoy Proxy listener.

Think of ClientTrafficPolicy as a set of rules for your Gateway’s entry points. It lets you configure specific behaviors for each listener in your Gateway, with more specific rules taking precedence over general ones.

ClientTrafficPolicy is particularly useful in scenarios where you need to:

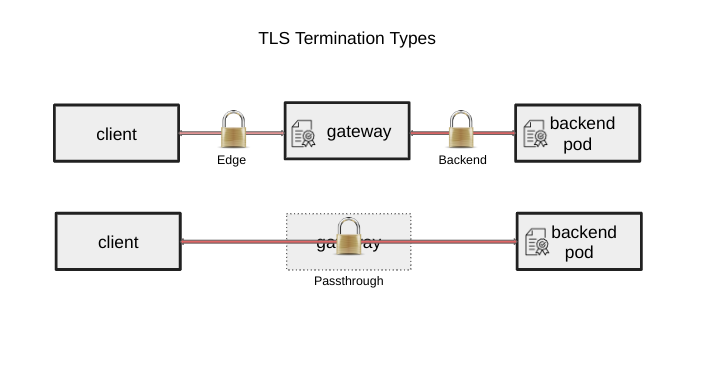

Enforce TLS Security Configure TLS termination, mutual TLS (mTLS), and certificate validation at the edge.

Manage Client Connections Control TCP keepalive behavior and connection timeouts for optimal resource usage.

Handle Client Identity Configure trusted proxy chains to correctly resolve client IPs for logging and access control.

Normalize Request Paths Sanitize incoming request paths to ensure compatibility with backend routing rules.

Tune HTTP Protocols Configure HTTP/1, HTTP/2, and HTTP/3 settings for compatibility and performance.

Monitor Listener Health Set up health checks for integration with load balancers and failover mechanisms.
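A few of these scenarios can be combined in a single policy. The following is a minimal sketch, assuming a Gateway named example-gateway (a hypothetical name) and illustrative timing values; the field names follow the v1alpha1 ClientTrafficPolicy API as I understand it and should be verified against the API reference.

```yaml
# Illustrative sketch: the Gateway name and all values are assumptions.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: example-client-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: example-gateway
  # Manage client connections: probe idle TCP connections.
  tcpKeepalive:
    idleTime: 20m
    interval: 60s
    probes: 3
  # Handle client identity: trust two proxy hops in X-Forwarded-For.
  clientIPDetection:
    xForwardedFor:
      numTrustedHops: 2
  # Close HTTP connections that stay idle for 30 seconds.
  timeout:
    http:
      idleTimeout: 30s
```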

ClientTrafficPolicy is part of the Envoy Gateway API suite, which extends the Kubernetes Gateway API with additional capabilities. It’s implemented as a Custom Resource Definition (CRD) that you can use to configure how Envoy Gateway manages incoming client traffic.

You can attach it to Gateway API resources in two ways:

- Use targetRefs to directly reference specific Gateway resources
- Use targetSelectors to match Gateway resources based on labels

The policy applies to all Gateway resources that match either targeting method. When multiple policies target the same resource, the most specific configuration wins.

For example, consider these policies targeting the same Gateway Listener:

```yaml
# Policy A: Targets a specific listener in the gateway
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: listener-specific-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: my-gateway
      sectionName: https-listener # Targets specific listener
  timeout:
    http:
      idleTimeout: 30s
---
# Policy B: Targets the entire gateway
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: gateway-wide-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: my-gateway # Targets all listeners
  timeout:
    http:
      idleTimeout: 60s
```

In this case, Policy A applies only to the listener named by targetRef.SectionName (https-listener), and Policy B applies to the remaining listeners of the Gateway.

SecurityPolicy is an Envoy Gateway extension to the Kubernetes Gateway API that allows you to define authentication and authorization requirements for traffic entering your gateway. It acts as a security layer that ensures only properly authenticated and authorized requests are allowed through to your backend services.

SecurityPolicy is designed for you to enforce access controls through configuration at the edge of your infrastructure in a declarative, Kubernetes-native way, without needing to configure complex proxy rules manually.
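As one example of this declarative style, the sketch below requires a valid JWT on a single route. The policy name, route name, provider name, and JWKS URL are all hypothetical placeholders; the jwt.providers and remoteJWKS fields follow the v1alpha1 SecurityPolicy API as I understand it.

```yaml
# Illustrative sketch: names and the JWKS URL are placeholders.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: SecurityPolicy
metadata:
  name: example-jwt-policy
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: example-route        # hypothetical route
  jwt:
    providers:
      - name: example-provider
        remoteJWKS:
          # Endpoint that serves the public keys used to verify tokens.
          uri: https://auth.example.com/.well-known/jwks.json
```

Requests to the targeted route that lack a token verifiable against the provider's keys are rejected at the gateway, before they reach the backend.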

Authentication Methods:

Authorization Controls:

Cross-Origin Security:

SecurityPolicy is implemented as a Kubernetes Custom Resource Definition (CRD) and follows the policy attachment model. You can attach it to Gateway API resources in two ways:

- Use targetRefs to directly reference specific Gateway resources
- Use targetSelectors to match Gateway resources based on labels

The policy applies to all resources that match either targeting method. When multiple policies target the same resource, the most specific configuration wins.

For example, consider these policies targeting the same Gateway Listener:

```yaml
# Policy A: Applies to a specific listener
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: SecurityPolicy
metadata:
  name: listener-policy
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: my-gateway
      sectionName: https # Applies only to "https" listener
  cors:
    allowOrigins:
      - exact: https://example.com
---
# Policy B: Applies to the entire gateway
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: SecurityPolicy
metadata:
  name: gateway-policy
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: my-gateway # Applies to all listeners
  cors:
    allowOrigins:
      - exact: https://default.com
```

In this example, Policy A affects only the https listener, while Policy B applies to the rest of the listeners in the gateway. Since Policy A is more specific, the system will show Overridden=True for Policy B on the https listener.

An API gateway is a centralized entry point for managing, securing, and routing requests to backend services. It handles cross-cutting concerns, like authentication, rate limiting, and protocol translation, so individual services don’t have to. Decoupling clients from internal systems simplifies scaling, enforces consistency, and reduces redundancy.

Use an API Gateway to:

Under the hood, Envoy Proxy is a powerful, production-grade proxy that supports many of the capabilities you’d expect from an API Gateway, like traffic routing, retries, TLS termination, observability, and more. However, configuring Envoy directly can be complex and verbose.

Envoy Gateway makes configuring Envoy Proxy simple by implementing and extending the Kubernetes-native Gateway API. You define high-level traffic rules using resources like Gateway, HTTPRoute, or TLSRoute, and Envoy Gateway automatically translates them into detailed Envoy Proxy configurations.

A proxy server is an intermediary between a client (like a web browser) and another server (like an API server). When the client makes a request, the proxy forwards it to the destination server, receives the response, and then sends it back to the client.

Proxies are used to enhance security, manage traffic, anonymize user activity, or optimize performance through caching and load balancing features. In cloud environments, they often handle critical tasks such as request routing, TLS termination, authentication, and traffic shaping.

Use Envoy Proxy to:

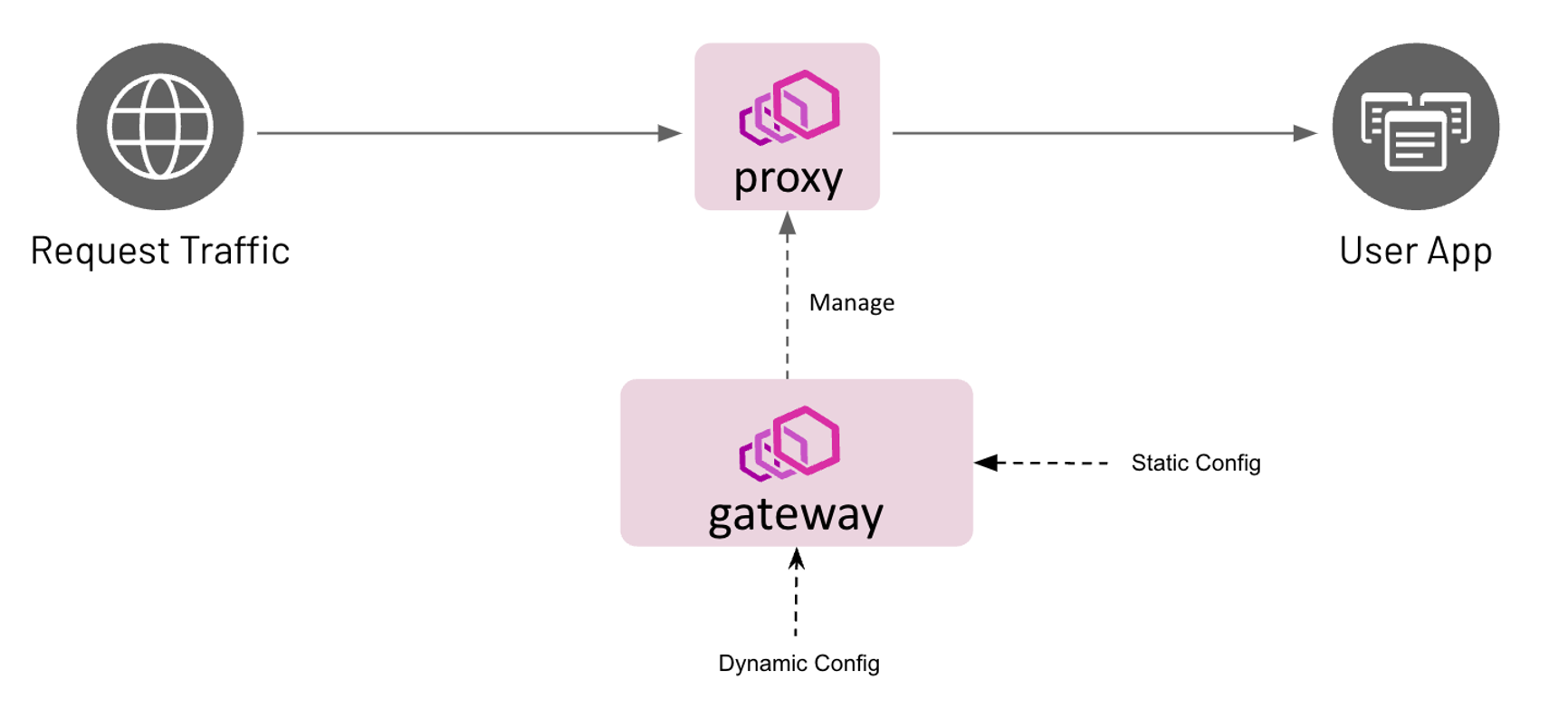

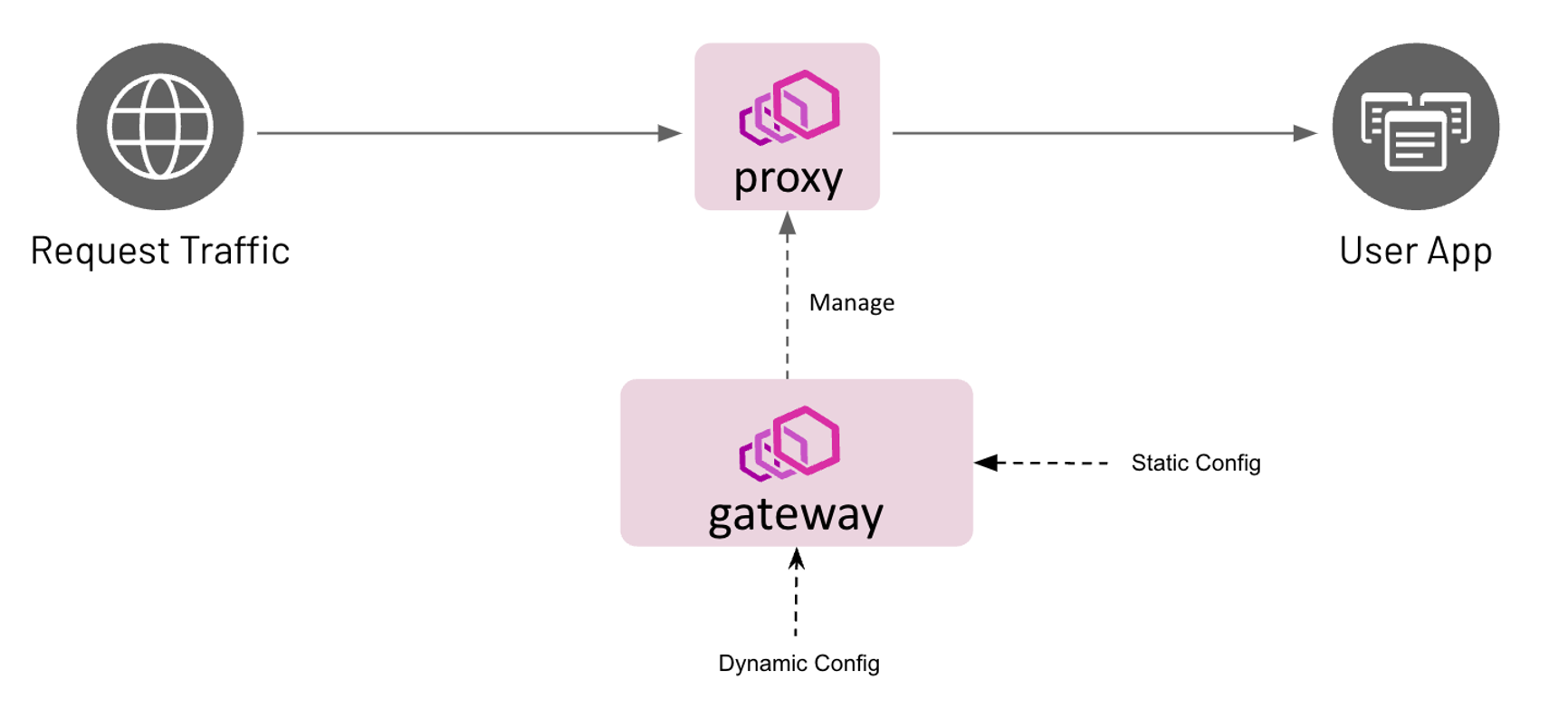

Envoy Gateway is a system made up of two main parts:

Envoy Gateway uses the Envoy Proxy, which was originally developed at Lyft. This proxy is the foundation of the Envoy project, of which Envoy Gateway is a part, and is now a graduated project within the Cloud Native Computing Foundation (CNCF).

Envoy Proxy is a high-performance, open-source proxy designed for cloud-native applications. Envoy supports use cases for edge and service proxies, routing traffic at the system’s boundary or between internal services.

The control plane uses the Kubernetes Gateway API to understand your settings and then translates them into the format Envoy Proxy needs (called xDS configuration). It also runs and updates the Envoy Proxy instances inside your Kubernetes cluster.

Load balancing distributes incoming requests across multiple backend services to improve availability, responsiveness, and scalability. Instead of directing all traffic to a single backend, which can cause slowdowns or outages, load balancing spreads the load across multiple instances, helping your applications stay fast and reliable under pressure.

Use load balancing to:

Envoy Gateway supports several load balancing strategies that determine how traffic is distributed across backend services. These strategies are configured using the BackendTrafficPolicy resource and can be applied to Gateway, HTTPRoute, or GRPCRoute resources either by directly referencing them using the targetRefs field or by dynamically selecting them using the targetSelectors field, which matches resources based on Kubernetes labels.

Supported load balancing types:

If no load balancing strategy is specified, Envoy Gateway uses Least Request by default.

This example shows how to apply the Round Robin strategy using a BackendTrafficPolicy that targets a specific HTTPRoute:

```yaml
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: round-robin-policy
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: round-robin-route
  loadBalancer:
    type: RoundRobin
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: round-robin-route
  namespace: default
spec:
  parentRefs:
    - name: eg
  hostnames:
    - "www.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /round
      backendRefs:
        - name: backend
          port: 3000
```

In this setup, traffic matching /round is distributed evenly across all available backend service instances. For example, if there are four replicas of the backend service, each one should receive roughly 25% of the requests.
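The example above attaches the policy with targetRefs. Label-based selection with targetSelectors can be sketched as follows; the policy name and the app: example label are assumptions for illustration, and the policy would apply to every HTTPRoute in the namespace carrying that label. The strategy is set to LeastRequest here only to make the default explicit.

```yaml
# Illustrative sketch: the policy name and label are assumptions.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: example-label-selected-policy
  namespace: default
spec:
  targetSelectors:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      # Selects every HTTPRoute labeled app=example instead of naming one route.
      matchLabels:
        app: example
  loadBalancer:
    type: LeastRequest
```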

Rate limiting is a technique for controlling the number of incoming requests over a defined period. It can be used to control usage for business purposes, like agreed usage quotas, or to ensure the stability of a system, preventing overload and protecting the system from, e.g., Denial of Service attacks.

Rate limiting is commonly used to:

Envoy Gateway supports two types of rate limiting:

Envoy Gateway supports rate limiting through the BackendTrafficPolicy custom resource. You can define rate-limiting rules and apply them to HTTPRoute, GRPCRoute, or Gateway resources either by directly referencing them with the targetRefs field or by dynamically selecting them using the targetSelectors field, which matches resources based on Kubernetes labels.

Rate limits are applied per route, even when a BackendTrafficPolicy targets a Gateway. For example, if the limit is 100r/s and a Gateway has 3 routes, each route has its own 100r/s bucket.

Global rate limiting ensures a consistent request limit across the entire Envoy fleet. This is ideal for shared resources or distributed environments where coordinated enforcement is critical.

Global limits are enforced via Envoy’s external Rate Limit Service, which is automatically deployed and managed by the Envoy Gateway system. The Rate Limit Service requires a datastore component (commonly Redis). When a request is received, Envoy sends a descriptor to this external service to determine if the request should be allowed.

Benefits of global limits:

```yaml
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: global-ratelimit
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: my-api
  rateLimit:
    type: Global
    global:
      rules:
        - limit:
            requests: 100
            unit: Minute
```

This configuration limits all requests across all Envoy instances for the my-api route to 100 requests per minute total. If there are multiple replicas of Envoy, the limit is shared across all of them.
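A rule can also be scoped to individual clients rather than to all traffic. The sketch below, a variation on the policy above, gives each distinct value of a request header its own bucket. The policy name and the x-user-id header are assumptions for illustration; the clientSelectors structure mirrors the sourceCIDR selector used in the merging example earlier on this page.

```yaml
# Illustrative sketch: the policy name and header name are assumptions.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: example-per-user-ratelimit
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: my-api
  rateLimit:
    type: Global
    global:
      rules:
        - clientSelectors:
            - headers:
                # Each distinct x-user-id value is counted separately.
                - name: x-user-id
                  type: Distinct
          limit:
            requests: 100
            unit: Minute
```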

Local rate limiting applies limits independently within each Envoy Proxy instance. It does not rely on external services, making it lightweight and efficient—especially for blocking abusive traffic early.

Benefits of local limits:

```yaml
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: local-ratelimit
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: my-api
  rateLimit:
    type: Local
    local:
      rules:
        - limit:
            requests: 50
            unit: Minute
```

This configuration limits traffic to 50 requests per minute per Envoy instance for the my-api route. If there are two Envoy replicas, up to 100 total requests per minute may be allowed (50 per replica).

This “quick start” will help you get started with Envoy Gateway in a few simple steps.

A Kubernetes cluster.

Note: Refer to the Compatibility Matrix for supported Kubernetes versions.

Note: In case your Kubernetes cluster does not have a LoadBalancer implementation, we recommend installing one so the Gateway resource has an Address associated with it. We recommend using MetalLB.

Install the Gateway API CRDs and Envoy Gateway:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Wait for Envoy Gateway to become available:

kubectl wait --timeout=5m -n envoy-gateway-system deployment/envoy-gateway --for=condition=Available

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Note: quickstart.yaml defines that Envoy Gateway will listen for traffic on port 80 on its globally-routable IP address, to make it easy to use browsers to test Envoy Gateway. When Envoy Gateway sees that its Listener is using a privileged port (<1024), it will map this internally to an unprivileged port, so that Envoy Gateway doesn’t need additional privileges. It’s important to be aware of this mapping, since you may need to take it into consideration when debugging.

You can also test the same functionality by sending traffic to the External IP. To get the external IP of the Envoy service, run:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

In certain environments, the load balancer may be exposed using a hostname instead of an IP address. The command above reads the first entry of the Gateway’s status addresses, whose value holds either form.

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

In this quickstart, you have:

Here is a suggested list of follow-on tasks to guide you in your exploration of Envoy Gateway:

Review the Tasks section for the scenario matching your use case. The Envoy Gateway tasks are organized by category: traffic management, security, extensibility, observability, and operations.

Use the steps in this section to uninstall everything from the quickstart.

Delete the GatewayClass, Gateway, HTTPRoute and Example App:

kubectl delete -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml --ignore-not-found=true

Delete the Gateway API CRDs and Envoy Gateway:

helm uninstall eg -n envoy-gateway-system

Envoy Gateway supports routing to native K8s resources such as Service and ServiceImport. The Backend API is a custom Envoy Gateway extension resource that can be used in a Gateway API BackendObjectReference.

The Backend API was added to support several use cases:

Service and EndpointSlice resources.

Similar to the K8s EndpointSlice API, the Backend API can be misused to allow traffic to be sent to otherwise restricted destinations, as described in CVE-2021-25740. A Backend resource can be used to:

When a Backend is configured with the DynamicResolver type, it can route traffic to any destination, effectively exposing all potential endpoints to clients. This can introduce security risks if not properly managed.

For these reasons, the Backend API is disabled by default in Envoy Gateway configuration. Envoy Gateway admins are advised to follow upstream recommendations and restrict access to the Backend API using K8s RBAC.

The Backend API is currently supported only in the following BackendReferences:

The Backend API supports attachment of the following policies:

Certain restrictions apply on the value of hostnames and addresses. For example, the loopback IP address range and the localhost hostname are forbidden.

Envoy Gateway does not manage the lifecycle of unix domain sockets referenced by the Backend resource. Envoy Gateway admins are responsible for creating and mounting the sockets into the envoy proxy pod. The latter can be achieved by patching the envoy deployment using the EnvoyProxy resource.
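For orientation, the two non-FQDN endpoint styles mentioned above can be sketched as follows. The resource names, the IP address, the port, and the socket path are illustrative assumptions; the socket itself must already be mounted into the Envoy proxy pod, as noted above.

```yaml
# Illustrative sketch: names, address, port, and socket path are assumptions.
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: example-ip-backend
spec:
  endpoints:
    # A cluster-external backend addressed by IP and port.
    - ip:
        address: 192.0.2.10
        port: 3000
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: example-uds-backend
spec:
  endpoints:
    # A local process reached over a Unix domain socket.
    - unix:
        path: /var/run/example/backend.sock
```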

Follow the steps below to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Install the Gateway API CRDs and Envoy Gateway using Helm:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Verify Connectivity:

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

The above command should succeed with status code 200.

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Get the deployment of the Envoy service created by the example Gateway:

export ENVOY_DEPLOYMENT=$(kubectl get deploy -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

The above command should succeed with status code 200.

By default, Backend is disabled. Let’s enable it in the EnvoyGateway startup configuration.

The default installation of Envoy Gateway installs a default EnvoyGateway configuration and attaches it using a ConfigMap. In the next step, we will update this resource to enable Backend.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: envoy-gateway-config
  namespace: envoy-gateway-system
data:
  envoy-gateway.yaml: |
    apiVersion: gateway.envoyproxy.io/v1alpha1
    kind: EnvoyGateway
    provider:
      type: Kubernetes
    gateway:
      controllerName: gateway.envoyproxy.io/gatewayclass-controller
    extensionApis:
      enableBackend: true
EOF
```

Save and apply the following resource to your cluster:

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: envoy-gateway-config
  namespace: envoy-gateway-system
data:
  envoy-gateway.yaml: |
    apiVersion: gateway.envoyproxy.io/v1alpha1
    kind: EnvoyGateway
    provider:
      type: Kubernetes
    gateway:
      controllerName: gateway.envoyproxy.io/gatewayclass-controller
    extensionApis:
      enableBackend: true
```

After updating the ConfigMap, you will need to wait for the configuration to take effect. You can force the configuration to be reloaded by restarting the envoy-gateway deployment:

kubectl rollout restart deployment envoy-gateway -n envoy-gateway-system

Create a Backend resource that routes to httpbin.org:80 and an HTTPRoute that references this backend.

```shell
cat <<EOF | kubectl apply -f -
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: backend
spec:
  parentRefs:
    - name: eg
  hostnames:
    - "www.example.com"
  rules:
    - backendRefs:
        - group: gateway.envoyproxy.io
          kind: Backend
          name: httpbin
      matches:
        - path:
            type: PathPrefix
            value: /
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: httpbin
  namespace: default
spec:
  endpoints:
    - fqdn:
        hostname: httpbin.org
        port: 80
EOF
```


Get the Gateway address:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Send a request and view the response:

curl -I -HHost:www.example.com http://${GATEWAY_HOST}/headers

Envoy Gateway can be configured as a dynamic forward proxy using the Backend API by setting its type to DynamicResolver. This allows Envoy Gateway to act as an HTTP proxy without needing prior knowledge of destination hostnames or IP addresses, while still maintaining its advanced routing and traffic management capabilities.

Under the hood, Envoy Gateway uses the Envoy Dynamic Forward Proxy to implement this feature.

In the following example, we will create an HTTPRoute that references a Backend resource of type DynamicResolver. This setup allows Envoy Gateway to dynamically resolve the hostname in the request and forward the traffic to the original destination of the request.

Note: the TLS configuration in the following example is optional. It’s only required if you want to use TLS to connect to the backend service. The example uses the system well-known CA certificate to validate the backend service’s certificate. You can also use a custom CA certificate by specifying the caCertificate field in the tls section.

cat <<EOF | kubectl apply -f -
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: dynamic-forward-proxy
spec:
  parentRefs:
    - name: eg
  rules:
    - backendRefs:
        - group: gateway.envoyproxy.io
          kind: Backend
          name: backend-dynamic-resolver
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: backend-dynamic-resolver
spec:
  type: DynamicResolver
  tls:
    wellKnownCACertificates: System
EOF


Get the Gateway address:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Send a request to gateway.envoyproxy.io and view the response:

curl -HHost:gateway.envoyproxy.io http://${GATEWAY_HOST}

You can also send a request to any other domain, and Envoy Gateway will resolve the hostname and route the traffic accordingly:

curl -HHost:httpbin.org http://${GATEWAY_HOST}/get
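The two requests above differ only in their Host header, which is what a dynamic forward proxy uses to pick the destination. As a rough illustration of that idea only (not Envoy's implementation, which resolves names through its own DNS cache), the Python sketch below derives a hostname and port from a Host header. The function name `upstream_from_host` and the default port of 80 are assumptions made for this example.

```python
def upstream_from_host(host_header: str, default_port: int = 80) -> tuple[str, int]:
    """Split a Host header into (hostname, port), using default_port when
    the header carries no explicit port. Hypothetical helper for illustration."""
    host = host_header.strip()
    if host.startswith("["):
        # Bracketed IPv6 literal, e.g. "[::1]:8080"
        end = host.index("]")
        name, rest = host[1:end], host[end + 1:]
        port = int(rest[1:]) if rest.startswith(":") else default_port
        return name, port
    name, sep, port = host.rpartition(":")
    if sep:
        # "example.com:8080"
        return name, int(port)
    # No port given: "httpbin.org"
    return host, default_port


print(upstream_from_host("gateway.envoyproxy.io"))
print(upstream_from_host("httpbin.org:8080"))
```

Because the destination comes from the request itself, no per-hostname Backend is needed; the single DynamicResolver Backend covers both curl commands.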

Envoy circuit breakers can be used to fail quickly and apply back-pressure in response to upstream service degradation.

Envoy Gateway supports the following circuit breaker thresholds:

Overflowing requests are terminated with a 503 status code.

Envoy’s circuit breakers are distributed: counters are not synchronized across different Envoy processes. The default Envoy and Envoy Gateway circuit breaker threshold values (1024) may be too strict for high-throughput systems.

Envoy Gateway introduces a new CRD called BackendTrafficPolicy that allows the user to describe their desired circuit breaker thresholds. This instantiated resource can be linked to a Gateway, HTTPRoute or GRPCRoute resource.

Note: There are distinct circuit breaker counters for each BackendReference in an xRoute rule. Even if a BackendTrafficPolicy targets a Gateway, each BackendReference in that gateway still has a separate circuit breaker counter.
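Since counters are kept per Envoy process and per BackendReference, the fleet-wide ceiling is a multiple of the configured threshold. The Python sketch below shows that arithmetic. The function name and the replica counts are hypothetical, and it assumes traffic is spread so that every proxy can reach its own threshold.

```python
def fleet_wide_ceiling(threshold: int, envoy_replicas: int, backend_refs: int = 1) -> int:
    """Upper bound on in-flight requests when every Envoy process keeps its
    own counter for every BackendReference (no synchronization)."""
    if threshold < 0 or envoy_replicas < 1 or backend_refs < 1:
        raise ValueError("threshold must be >= 0; replicas and backend_refs must be >= 1")
    return threshold * envoy_replicas * backend_refs


# Hypothetical deployment: 3 Envoy replicas with the default threshold of 1024.
print(fleet_wide_ceiling(1024, 3))
# A threshold of 10 on a route with 2 backendRefs, served by 3 replicas.
print(fleet_wide_ceiling(10, 3, 2))
```

When sizing thresholds, this means the backend may see more concurrent load than a single threshold value suggests.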

Follow the steps below to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Install the Gateway API CRDs and Envoy Gateway using Helm:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Verify Connectivity:

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

The above command should succeed with status code 200.

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Get the deployment of the Envoy service created by the example Gateway:

export ENVOY_DEPLOYMENT=$(kubectl get deploy -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

The above command should succeed with status code 200.

The hey CLI will be used to generate load and measure response times. Follow the installation instructions from the Hey project docs.

This example simulates a degraded backend that takes 10 seconds to respond by adding the ?delay=10s query parameter to API calls. The hey tool will be used to generate 100 concurrent requests.

hey -n 100 -c 100 -host "www.example.com" "http://${GATEWAY_HOST}/?delay=10s"

Summary:

Total: 10.3426 secs

Slowest: 10.3420 secs

Fastest: 10.0664 secs

Average: 10.2145 secs

Requests/sec: 9.6687

Total data: 36600 bytes

Size/request: 366 bytes

Response time histogram:

10.066 [1] |■■■■

10.094 [4] |■■■■■■■■■■■■■■■

10.122 [9] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.149 [10] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.177 [10] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.204 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.232 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.259 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.287 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.314 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

10.342 [11] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

The default circuit breaker threshold (1024) is not met. As a result, requests do not overflow: all requests are proxied upstream and both Envoy and clients wait for 10s.

In order to fail fast, apply a BackendTrafficPolicy that limits concurrent requests to 10 and pending requests to 0.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: circuitbreaker-for-route
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: backend
  circuitBreaker:
    maxPendingRequests: 0
    maxParallelRequests: 10
EOF


Execute the load simulation again.

hey -n 100 -c 100 -host "www.example.com" "http://${GATEWAY_HOST}/?delay=10s"

Summary:

Total: 10.1230 secs

Slowest: 10.1224 secs

Fastest: 0.0529 secs

Average: 1.0677 secs

Requests/sec: 9.8785

Total data: 10940 bytes

Size/request: 109 bytes

Response time histogram:

0.053 [1] |

1.060 [89] |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■

2.067 [0] |

3.074 [0] |

4.081 [0] |

5.088 [0] |

6.095 [0] |

7.102 [0] |

8.109 [0] |

9.115 [0] |

10.122 [10] |■■■■

With the new circuit breaker settings, and due to the slowness of the backend, only the first 10 concurrent requests were proxied, while the other 90 overflowed.
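The 10/90 split can be reproduced with a simplified admission model. The Python sketch below is an illustration only: it treats the 100 requests as one simultaneous burst, whereas Envoy updates its counters continuously as requests complete. The names `Outcome` and `admit` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    proxied: int     # sent upstream immediately
    queued: int      # waiting in the pending queue
    overflowed: int  # rejected right away


def admit(concurrent: int, max_parallel: int, max_pending: int) -> Outcome:
    """Simplified burst model: fill the parallel slots, then the pending
    queue, and reject whatever is left."""
    proxied = min(concurrent, max_parallel)
    queued = min(concurrent - proxied, max_pending)
    overflowed = concurrent - proxied - queued
    return Outcome(proxied, queued, overflowed)


# Default thresholds (1024): nothing overflows, so every request waits 10s.
print(admit(100, max_parallel=1024, max_pending=1024))
# Policy from this task: maxParallelRequests=10, maxPendingRequests=0.
print(admit(100, max_parallel=10, max_pending=0))
```

With a pending queue of 0, every request beyond the 10 parallel slots fails fast instead of waiting, which is why the average latency in the second run drops to about one second.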

This task explains the usage of the ClientTrafficPolicy API.

The ClientTrafficPolicy API allows system administrators to configure how the Envoy Proxy server behaves with downstream clients.

This API is a policy attachment resource that can be applied to Gateway resources. It holds settings that configure the connection between the downstream client and the Envoy Proxy listener, and it is the counterpart to the BackendTrafficPolicy API resource.

Follow the steps below to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Install the Gateway API CRDs and Envoy Gateway using Helm:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Verify Connectivity:

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

The above command should succeed with status code 200.

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Get the deployment of the Envoy service created by the example Gateway:

export ENVOY_DEPLOYMENT=$(kubectl get deploy -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

The above command should succeed with status code 200.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: enable-tcp-keepalive-policy
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  tcpKeepalive:
    idleTime: 20m
    interval: 60s
    probes: 3
EOF


Verify that ClientTrafficPolicy is Accepted:

kubectl get clienttrafficpolicies.gateway.envoyproxy.io -n default

You should see the policy marked as accepted like this:

NAME STATUS AGE

enable-tcp-keepalive-policy Accepted 5s

Curl the example app through Envoy proxy once again:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get --next --header "Host: www.example.com" http://$GATEWAY_HOST/headers

You should see the output like this:

* Trying 172.18.255.202:80...

* Connected to 172.18.255.202 (172.18.255.202) port 80 (#0)

> GET /get HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.1.2

> Accept: */*

>

< HTTP/1.1 200 OK

< content-type: application/json

< x-content-type-options: nosniff

< date: Fri, 01 Dec 2023 10:17:04 GMT

< content-length: 507

< x-envoy-upstream-service-time: 0

< server: envoy

<

{

"path": "/get",

"host": "www.example.com",

"method": "GET",

"proto": "HTTP/1.1",

"headers": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.1.2"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"15000"

],

"X-Envoy-Internal": [

"true"

],

"X-Forwarded-For": [

"172.18.0.2"

],

"X-Forwarded-Proto": [

"http"

],

"X-Request-Id": [

"4d0d33e8-d611-41f0-9da0-6458eec20fa5"

]

},

"namespace": "default",

"ingress": "",

"service": "",

"pod": "backend-58d58f745-2zwvn"

* Connection #0 to host 172.18.255.202 left intact

}* Found bundle for host: 0x7fb9f5204ea0 [serially]

* Can not multiplex, even if we wanted to

* Re-using existing connection #0 with host 172.18.255.202

> GET /headers HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.1.2

> Accept: */*

>

< HTTP/1.1 200 OK

< content-type: application/json

< x-content-type-options: nosniff

< date: Fri, 01 Dec 2023 10:17:04 GMT

< content-length: 511

< x-envoy-upstream-service-time: 0

< server: envoy

<

{

"path": "/headers",

"host": "www.example.com",

"method": "GET",

"proto": "HTTP/1.1",

"headers": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.1.2"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"15000"

],

"X-Envoy-Internal": [

"true"

],

"X-Forwarded-For": [

"172.18.0.2"

],

"X-Forwarded-Proto": [

"http"

],

"X-Request-Id": [

"9a8874c0-c117-481c-9b04-933571732ca5"

]

},

"namespace": "default",

"ingress": "",

"service": "",

"pod": "backend-58d58f745-2zwvn"

* Connection #0 to host 172.18.255.202 left intact

}

The keepalive connection is indicated by the following lines in the output:

* Connection #0 to host 172.18.255.202 left intact

* Re-using existing connection #0 with host 172.18.255.202
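To get a feel for what the tcpKeepalive values in the policy mean, the Python sketch below estimates how long an unresponsive client can go unnoticed. It assumes conventional TCP keepalive semantics (wait for the idle time, then send probes at the given interval until the probe count is exhausted); the function name is hypothetical and the result is approximate.

```python
from datetime import timedelta


def dead_peer_detection_time(idle: timedelta, interval: timedelta, probes: int) -> timedelta:
    """Approximate time from the last activity until an unresponsive peer is
    declared dead: wait `idle`, then send `probes` probes `interval` apart."""
    if probes < 1:
        raise ValueError("probes must be >= 1")
    return idle + interval * probes


# Values from the policy above: idleTime=20m, interval=60s, probes=3.
print(dead_peer_detection_time(timedelta(minutes=20), timedelta(seconds=60), 3))
```

Lowering idleTime shortens detection the most, since the probe phase here adds only three minutes.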

This example configures Proxy Protocol for downstream clients.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: enable-proxy-protocol-policy
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  enableProxyProtocol: true
EOF


Verify that ClientTrafficPolicy is Accepted:

kubectl get clienttrafficpolicies.gateway.envoyproxy.io -n default

You should see the policy marked as accepted like this:

NAME STATUS AGE

enable-proxy-protocol-policy Accepted 5s

Try the endpoint without using PROXY protocol with curl:

curl -v --header "Host: www.example.com" http://$GATEWAY_HOST/get

* Trying 172.18.255.202:80...

* Connected to 172.18.255.202 (172.18.255.202) port 80 (#0)

> GET /get HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.1.2

> Accept: */*

>

* Recv failure: Connection reset by peer

* Closing connection 0

curl: (56) Recv failure: Connection reset by peer

Curl the example app through Envoy proxy once again, now sending the HAProxy PROXY protocol header at the beginning of the connection with the --haproxy-protocol flag:

curl --verbose --haproxy-protocol --header "Host: www.example.com" http://$GATEWAY_HOST/get

You should now get a 200 response status and see that the source IP was preserved in the X-Forwarded-For header.

* Trying 172.18.255.202:80...

* Connected to 172.18.255.202 (172.18.255.202) port 80 (#0)

> GET /get HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.1.2

> Accept: */*

>

< HTTP/1.1 200 OK

< content-type: application/json

< x-content-type-options: nosniff

< date: Mon, 04 Dec 2023 21:11:43 GMT

< content-length: 510

< x-envoy-upstream-service-time: 0

< server: envoy

<

{

"path": "/get",

"host": "www.example.com",

"method": "GET",

"proto": "HTTP/1.1",

"headers": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.1.2"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"15000"

],

"X-Envoy-Internal": [

"true"

],

"X-Forwarded-For": [

"192.168.255.6"

],

"X-Forwarded-Proto": [

"http"

],

"X-Request-Id": [

"290e4b61-44b7-4e5c-a39c-0ec76784e897"

]

},

"namespace": "default",

"ingress": "",

"service": "",

"pod": "backend-58d58f745-2zwvn"

* Connection #0 to host 172.18.255.202 left intact

}
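For context, the header that curl's --haproxy-protocol flag sends is a single text line in PROXY protocol version 1 format, written before the HTTP request. The Python sketch below builds and parses such a line. The helper names are hypothetical, the source port 51234 is a made-up value, and only the TCP4/TCP6 form of the v1 header is handled.

```python
def proxy_v1_header(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Build a PROXY protocol v1 line for a TCP connection."""
    family = "TCP6" if ":" in src_ip else "TCP4"
    return f"PROXY {family} {src_ip} {dst_ip} {src_port} {dst_port}\r\n".encode("ascii")


def parse_proxy_v1(line: bytes) -> dict:
    """Parse a PROXY protocol v1 TCP4/TCP6 line into its fields."""
    parts = line.decode("ascii").rstrip("\r\n").split(" ")
    if len(parts) != 6 or parts[0] != "PROXY" or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("not a PROXY protocol v1 TCP header")
    _, family, src_ip, dst_ip, src_port, dst_port = parts
    return {
        "family": family,
        "src_ip": src_ip,
        "dst_ip": dst_ip,
        "src_port": int(src_port),
        "dst_port": int(dst_port),
    }


# Addresses taken from the example output above; the source port is illustrative.
header = proxy_v1_header("192.168.255.6", "172.18.255.202", 51234, 80)
print(header)
print(parse_proxy_v1(header)["src_ip"])
```

A listener that requires this line rejects a plain HTTP request, which matches the connection reset seen in the first curl attempt.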

This example configures the number of additional ingress proxy hops from the right side of the X-Forwarded-For (XFF) HTTP header to trust when determining the origin client’s IP address, and determines whether x-forwarded-proto headers will be trusted. Refer to https://www.envoyproxy.io/docs/envoy/latest/configuration/http/http_conn_man/headers#x-forwarded-for for details.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: http-client-ip-detection
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  clientIPDetection:
    xForwardedFor:
      numTrustedHops: 2
EOF


Verify that ClientTrafficPolicy is Accepted:

kubectl get clienttrafficpolicies.gateway.envoyproxy.io -n default

You should see the policy marked as accepted like this:

NAME STATUS AGE

http-client-ip-detection Accepted 5s

Open port-forward to the admin interface port:

kubectl port-forward deploy/${ENVOY_DEPLOYMENT} -n envoy-gateway-system 19000:19000

Curl the admin interface port to fetch the configured value for xff_num_trusted_hops:

curl -s 'http://localhost:19000/config_dump?resource=dynamic_listeners' \
  | jq -r '.configs[1].active_state.listener.default_filter_chain.filters[0].typed_config
      | {use_remote_address, original_ip_detection_extensions}'

You should expect to see the following:

{
  "use_remote_address": false,
  "original_ip_detection_extensions": [
    {
      "name": "envoy.extensions.http.original_ip_detection.xff",
      "typed_config": {
        "@type": "type.googleapis.com/envoy.extensions.http.original_ip_detection.xff.v3.XffConfig",
        "xff_num_trusted_hops": 1,
        "skip_xff_append": false
      }
    }
  ]
}

Curl the example app through Envoy proxy:

curl -v http://$GATEWAY_HOST/get \
  -H "Host: www.example.com" \
  -H "X-Forwarded-Proto: https" \
  -H "X-Forwarded-For: 1.1.1.1,2.2.2.2"

You should expect a 200 response status and see that X-Forwarded-Proto was preserved and X-Envoy-External-Address was set to the leftmost address in the X-Forwarded-For header:

* Trying [::1]:8888...

* Connected to localhost (::1) port 8888

> GET /get HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.4.0

> Accept: */*

> X-Forwarded-Proto: https

> X-Forwarded-For: 1.1.1.1,2.2.2.2

>

Handling connection for 8888

< HTTP/1.1 200 OK

< content-type: application/json

< x-content-type-options: nosniff

< date: Tue, 30 Jan 2024 15:19:22 GMT

< content-length: 535

< x-envoy-upstream-service-time: 0

< server: envoy

<

{

"path": "/get",

"host": "www.example.com",

"method": "GET",

"proto": "HTTP/1.1",

"headers": {

"Accept": [

"*/*"

],

"User-Agent": [

"curl/8.4.0"

],

"X-Envoy-Expected-Rq-Timeout-Ms": [

"15000"

],

"X-Envoy-External-Address": [

"1.1.1.1"

],

"X-Forwarded-For": [

"1.1.1.1,2.2.2.2,10.244.0.9"

],

"X-Forwarded-Proto": [

"https"

],

"X-Request-Id": [

"53ccfad7-1899-40fa-9322-ddb833aa1ac3"

]

},

"namespace": "default",

"ingress": "",

"service": "",

"pod": "backend-58d58f745-8psnc"

* Connection #0 to host localhost left intact

}
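The address selection in this example can be approximated with a small model. The Python sketch below assumes that, with numTrustedHops set to N, the client address is the Nth entry counted from the right of the X-Forwarded-For header sent by the client. That assumption reproduces the output above, but it is a simplification: Envoy's actual rules cover more cases, so treat the function (a hypothetical helper) as an illustration rather than a reference.

```python
from typing import Optional


def trusted_client_ip(xff_header: str, num_trusted_hops: int) -> Optional[str]:
    """Return the Nth address from the right of an X-Forwarded-For value,
    or None when the header has fewer than N entries (simplified model)."""
    hops = [h.strip() for h in xff_header.split(",") if h.strip()]
    if num_trusted_hops < 1 or len(hops) < num_trusted_hops:
        return None
    return hops[-num_trusted_hops]


# Header sent by the curl command above, with numTrustedHops: 2.
print(trusted_client_ip("1.1.1.1,2.2.2.2", 2))
```

With two entries and two trusted hops, the selected address is also the leftmost one, which is why X-Envoy-External-Address shows 1.1.1.1 in the response.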

This feature allows you to limit the time taken by the Envoy Proxy fleet to receive the entire request from the client, which is useful in preventing certain clients from consuming too much memory in Envoy. This example configures the HTTP request timeout for the client; for details, see here.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: client-timeout
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  timeout:
    http:
      requestReceivedTimeout: 2s
EOF


Curl the example app through Envoy proxy:

curl -v http://$GATEWAY_HOST/get \
  -H "Host: www.example.com" \
  -H "Content-Length: 10000"

You should expect a 408 response status after 2s:

curl -v http://$GATEWAY_HOST/get \

-H "Host: www.example.com" \

-H "Content-Length: 10000"

* Trying 172.18.255.200:80...

* Connected to 172.18.255.200 (172.18.255.200) port 80

> GET /get HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.4.0

> Accept: */*

> Content-Length: 10000

>

< HTTP/1.1 408 Request Timeout

< content-length: 15

< content-type: text/plain

< date: Tue, 27 Feb 2024 07:38:27 GMT

< connection: close

<

* Closing connection

request timeout

The idle timeout is defined as the period in which there are no active requests. When the idle timeout is reached the connection will be closed. For more details see here.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: client-timeout
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  timeout:
    http:
      idleTimeout: 5s
EOF


Open a connection to the Gateway with openssl and leave it idle:

openssl s_client -crlf -connect $GATEWAY_HOST:443

You should expect the connection to be closed after 5s.

You can also check the number of connections closed due to idle timeout by using the following query:

envoy_http_downstream_cx_idle_timeout{envoy_http_conn_manager_prefix="<name of connection manager>"}

The number of connections closed due to idle timeout should increase by 1.

This feature allows you to set a soft limit on the size of the listener’s new connection read and write buffers.

The size is configured using the resource.Quantity format, see examples here.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: client-timeout
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  connection:
    bufferLimit: 1024
EOF

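The bufferLimit value of 1024 is a plain byte count, but the resource.Quantity format also allows unit suffixes. The Python sketch below converts a small subset of that format to bytes: whole numbers with an optional decimal (k, M, G) or binary (Ki, Mi, Gi) suffix. The function name is hypothetical, fractional and exponent forms are deliberately left out, and whether every suffix is accepted for this particular field is not confirmed here.

```python
import re

# Multipliers for the subset of Kubernetes quantity suffixes handled here.
_SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
}


def quantity_to_bytes(quantity: str) -> int:
    """Convert strings such as '1024', '32Ki' or '1M' to a number of bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|k|M|G)?", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * _SUFFIXES[suffix or ""]


print(quantity_to_bytes("1024"))
print(quantity_to_bytes("32Ki"))
print(quantity_to_bytes("1M"))
```

Note the difference between the decimal and binary families: 1M is 1,000,000 bytes, while 1Mi is 1,048,576 bytes.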

The connection limit feature allows users to limit the number of concurrently active TCP connections on a Gateway or a Listener. When the connection limit is reached, new connections are closed immediately by Envoy proxy. It’s possible to configure a delay for connection rejection.

Users may want to limit the number of connections for several reasons:

Envoy Gateway introduces a new CRD called ClientTrafficPolicy that allows the user to describe their desired connection limit settings. This instantiated resource can be linked to a Gateway.

The Envoy connection limit implementation is distributed: counters are not synchronized between different Envoy proxies.

When a ClientTrafficPolicy is attached to a gateway, the connection limit will apply differently based on the Listener protocol in use:

Follow the steps below to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Install the Gateway API CRDs and Envoy Gateway using Helm:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Verify Connectivity:

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

The above command should succeed with status code 200.

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Get the deployment of the Envoy service created by the example Gateway:

export ENVOY_DEPLOYMENT=$(kubectl get deploy -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

The above command should succeed with status code 200.

The hey CLI will be used to generate load and measure response times. Follow the installation instructions from the Hey project docs.

In this example, we use hey to open 10 connections and execute 1 RPS per connection for 10 seconds.

hey -c 10 -q 1 -z 10s -host "www.example.com" http://${GATEWAY_HOST}/get

Summary:

Total: 10.0058 secs

Slowest: 0.0275 secs

Fastest: 0.0029 secs

Average: 0.0111 secs

Requests/sec: 9.9942

[...]

Status code distribution:

[200] 100 responses

There are no connection limits, and so all 100 requests succeed.

Next, we apply a limit of 5 connections.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: ClientTrafficPolicy
metadata:
  name: connection-limit-ctp
  namespace: default
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
  connection:
    connectionLimit:
      value: 5
EOF


Execute the load simulation again.

hey -c 10 -q 1 -z 10s -host "www.example.com" http://${GATEWAY_HOST}/get

Summary:

Total: 11.0327 secs

Slowest: 0.0361 secs

Fastest: 0.0013 secs

Average: 0.0088 secs

Requests/sec: 9.0640

[...]

Status code distribution:

[200] 50 responses

Error distribution:

[50] Get "http://localhost:8888/get": EOF

With the new connection limit, only 5 of 10 connections are established, and so only 50 requests succeed.
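The response counts in both runs follow from simple arithmetic. The Python sketch below models it under the assumption that every hey worker attempts one request per second for the whole run, and that workers whose connection is refused fail each attempt. The function name is hypothetical, and the model ignores timing jitter.

```python
from typing import Optional, Tuple


def expected_requests(connections: int, limit: Optional[int],
                      rps_per_connection: int, seconds: int) -> Tuple[int, int]:
    """Return (successful, failed) request counts for a fixed-rate load test
    against a listener with an optional connection limit."""
    accepted = connections if limit is None else min(connections, limit)
    refused = connections - accepted
    per_connection = rps_per_connection * seconds
    return accepted * per_connection, refused * per_connection


# First run: no limit, 10 connections at 1 RPS for 10 seconds.
print(expected_requests(10, None, 1, 10))
# Second run: connectionLimit value of 5.
print(expected_requests(10, 5, 1, 10))
```

The second result matches the status code and error distributions above: 50 responses with status 200 and 50 EOF errors.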

Direct responses are valuable in cases where you want the gateway itself to handle certain requests without forwarding them to backend services. This task shows you how to configure them.

Follow the steps from the Quickstart to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Note: the size of the response body (whether provided in-line or via a reference) cannot exceed 4096 bytes.

cat <<EOF | kubectl apply -f -
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: direct-response
spec:
  parentRefs:
    - name: eg
  hostnames:
    - "www.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /inline
      filters:
        - type: ExtensionRef
          extensionRef:
            group: gateway.envoyproxy.io
            kind: HTTPRouteFilter
            name: direct-response-inline
    - matches:
        - path:
            type: PathPrefix
            value: /value-ref
      filters:
        - type: ExtensionRef
          extensionRef:
            group: gateway.envoyproxy.io
            kind: HTTPRouteFilter
            name: direct-response-value-ref
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: value-ref-response
data:
  response.body: '{"error": "Internal Server Error"}'
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: HTTPRouteFilter
metadata:
  name: direct-response-inline
spec:
  directResponse:
    contentType: text/plain
    statusCode: 503
    body:
      type: Inline
      inline: "Oops! Your request is not found."
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: HTTPRouteFilter
metadata:
  name: direct-response-value-ref
spec:
  directResponse:
    contentType: application/json
    statusCode: 500
    body:
      type: ValueRef
      valueRef:
        group: ""
        kind: ConfigMap
        name: value-ref-response
EOF


curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/inline

* Trying 127.0.0.1:80...

* Connected to 127.0.0.1 (127.0.0.1) port 80

> GET /inline HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.4.0

> Accept: */*

>

< HTTP/1.1 503 Service Unavailable

< content-type: text/plain

< content-length: 32

< date: Sat, 02 Nov 2024 00:35:48 GMT

<

* Connection #0 to host 127.0.0.1 left intact

Oops! Your request is not found.

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/value-ref

* Trying 127.0.0.1:80...

* Connected to 127.0.0.1 (127.0.0.1) port 80

> GET /value-ref HTTP/1.1

> Host: www.example.com

> User-Agent: curl/8.4.0

> Accept: */*

>

< HTTP/1.1 500 Internal Server Error

< content-type: application/json

< content-length: 34

< date: Sat, 02 Nov 2024 00:35:55 GMT

<

* Connection #0 to host 127.0.0.1 left intact

{"error": "Internal Server Error"}
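The content-length values in the two responses are the byte sizes of the configured bodies, and both sit well under the 4096-byte limit noted earlier. The Python sketch below is a hypothetical pre-flight check you could run on a body before putting it in an HTTPRouteFilter or ConfigMap. The function name is an assumption, and it measures the UTF-8 encoded size.

```python
MAX_BODY_BYTES = 4096  # limit stated in the note earlier in this task


def direct_response_length(body: str) -> int:
    """Return the body's size in bytes, or raise if it exceeds the limit."""
    size = len(body.encode("utf-8"))
    if size > MAX_BODY_BYTES:
        raise ValueError(f"body is {size} bytes; the limit is {MAX_BODY_BYTES}")
    return size


# Bodies from the manifests in this task.
print(direct_response_length("Oops! Your request is not found."))
print(direct_response_length('{"error": "Internal Server Error"}'))
```

Measuring bytes rather than characters matters for bodies with non-ASCII text, where one character can take several bytes.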

Active-passive failover in an API gateway setup is like having a backup plan in place to keep things running smoothly if something goes wrong. Here’s why it’s valuable:

Staying Online: When the main (or “active”) backend has issues or goes offline, the fallback (or “passive”) backend is ready to step in instantly. This helps keep your API accessible and your services running, so users don’t even notice any interruptions.

Automatic Switch Over: If a problem occurs, the system can automatically switch traffic over to the fallback backend. This avoids needing someone to jump in and fix things manually, which could take time and might even lead to mistakes.

Lower Costs: In an active-passive setup, the fallback backend doesn’t need to work all the time; it’s just on standby. This can save on costs (like cloud egress costs) compared to setups where both backends are running at full capacity.

Peace of Mind with Redundancy: Although the fallback backend isn’t handling traffic daily, it’s there as a safety net. If something happens with the primary backend, the backup can take over immediately, ensuring your service doesn’t skip a beat.

Follow the steps below to install Envoy Gateway and the example manifest. Before proceeding, you should be able to query the example backend using HTTP.

Install the Gateway API CRDs and Envoy Gateway using Helm:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v0.0.0-latest -n envoy-gateway-system --create-namespace

Install the GatewayClass, Gateway, HTTPRoute and example app:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/latest/quickstart.yaml -n default

Verify Connectivity:

Get the External IP of the Gateway:

export GATEWAY_HOST=$(kubectl get gateway/eg -o jsonpath='{.status.addresses[0].value}')

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://$GATEWAY_HOST/get

The above command should succeed with status code 200.

Get the name of the Envoy service created by the example Gateway:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Get the name of the Envoy deployment created by the example Gateway:

export ENVOY_DEPLOYMENT=$(kubectl get deploy -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

Port forward to the Envoy service:

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &

Curl the example app through Envoy proxy:

curl --verbose --header "Host: www.example.com" http://localhost:8888/get

The above command should succeed with status code 200.
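Because the port-forward above runs in the background, the first curl can fail if it is issued before the tunnel is ready. A minimal sketch of a retry wrapper, assuming a POSIX shell; the function name retry and the attempt and delay values are illustrative and are not part of kubectl or Envoy Gateway:

```shell
# Hypothetical helper: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND at least once, and again until it exits 0 or ATTEMPTS runs have failed.
retry() {
  retry_attempts=$1
  retry_delay=$2
  shift 2
  retry_i=1
  while :; do
    "$@" && return 0
    # Stop without sleeping once the last allowed attempt has failed.
    [ "$retry_i" -ge "$retry_attempts" ] && return 1
    retry_i=$((retry_i + 1))
    sleep "$retry_delay"
  done
}

# Example usage: --fail makes curl exit non-zero on HTTP errors (status >= 400).
#   retry 10 1 curl --silent --fail --output /dev/null \
#     --header "Host: www.example.com" http://localhost:8888/get
```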

Create two services, active and passive, representing an active and a passive backend application:

cat <<EOF | kubectl apply -f -

apiVersion: v1
kind: Service
metadata:
  name: active
  labels:
    app: active
    service: active
spec:
  ports:
    - name: http
      port: 3000
      targetPort: 3000
  selector:
    app: active
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: active
spec:
  replicas: 1
  selector:
    matchLabels:
      app: active
      version: v1
  template:
    metadata:
      labels:
        app: active
        version: v1
    spec:
      containers:
        - image: gcr.io/k8s-staging-gateway-api/echo-basic:v20231214-v1.0.0-140-gf544a46e
          imagePullPolicy: IfNotPresent
          name: active
          ports:
            - containerPort: 3000
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
---
apiVersion: v1
kind: Service
metadata:
  name: passive
  labels:
    app: passive
    service: passive
spec:
  ports:
    - name: http
      port: 3000
      targetPort: 3000
  selector:
    app: passive
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: passive
spec:
  replicas: 1
  selector:
    matchLabels:
      app: passive
      version: v1
  template:
    metadata:
      labels:
        app: passive
        version: v1
    spec:
      containers:
        - image: gcr.io/k8s-staging-gateway-api/echo-basic:v20231214-v1.0.0-140-gf544a46e
          imagePullPolicy: IfNotPresent
          name: passive
          ports:
            - containerPort: 3000
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace

EOF

Alternatively, save the Service and Deployment manifest above to a file and apply it to your cluster with kubectl apply -f.

Follow the instructions here to enable the Backend API

Create two Backend resources that are used to represent the active backend and passive backend.

Note that fallback: true is set on the passive backend to indicate that it is a passive backend.

cat <<EOF | kubectl apply -f -

---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: passive
spec:
  fallback: true
  endpoints:
    - fqdn:
        hostname: passive.default.svc.cluster.local
        port: 3000
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: active
spec:
  endpoints:
    - fqdn:
        hostname: active.default.svc.cluster.local
        port: 3000
---

EOF

Alternatively, save the two Backend resources above to a file and apply them to your cluster with kubectl apply -f.
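The two Backend resources differ only in their name, hostname, and the fallback flag. As a minimal sketch, assuming a POSIX shell and the default namespace and port 3000 used throughout this example, a hypothetical helper (backend_manifest is not part of Envoy Gateway) could render either one:

```shell
# Hypothetical helper: backend_manifest NAME [FALLBACK]
# Prints a Backend manifest whose endpoint is NAME.default.svc.cluster.local:3000.
# Pass "true" as FALLBACK to mark the backend as the passive (fallback) one.
# Assumption: an explicit "fallback: false" should behave like omitting the field,
# which is what the active Backend in this guide does.
backend_manifest() {
  backend_name=$1
  backend_fallback=${2:-false}
  cat <<EOF
---
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: Backend
metadata:
  name: ${backend_name}
spec:
  fallback: ${backend_fallback}
  endpoints:
    - fqdn:
        hostname: ${backend_name}.default.svc.cluster.local
        port: 3000
EOF
}

# Example usage:
#   backend_manifest active | kubectl apply -f -
#   backend_manifest passive true | kubectl apply -f -
```

Rendering the manifests from one template keeps the active and passive definitions from drifting apart; the explicit YAML shown earlier remains the reference form.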

Create an HTTPRoute that references both the active and the passive Backend as backendRefs:

cat <<EOF | kubectl apply -f -

---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: ha-example
  namespace: default
spec:
  hostnames:
    - www.example.com
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: eg
      namespace: default
  rules:
    - backendRefs:
        - group: gateway.envoyproxy.io
          kind: Backend
          name: active
          namespace: default
          port: 3000
        - group: gateway.envoyproxy.io
          kind: Backend
          name: passive
          namespace: default
          port: 3000
      matches:
        - path:
            type: PathPrefix
            value: /test

EOF

Save and apply the following resources to your cluster:

---